Next Gen Assistant

Steady advances in AI, and in particular in its ability to understand people, have made virtual assistants a standard part of our world, and more and more companies use them as a tool to provide assistance to their users.

Factors such as low cost, high availability and easy maintenance make them especially attractive for a company's support area, where they can improve user satisfaction and the relationship between user and company.

However, a poorly used assistant leaves the user with a negative experience, causing frustration and abandonment. So, what factors must be taken into account when developing a good virtual assistant?

To provide a working example, a virtual assistant has been developed that applies everything discussed in this blog post. You will find the link at the end, after reading all the content ;D

Technology

Angular 7.2.0

NestJS 6.0.0

IBM Watson

Motivation

Currently, the usual interaction between a user and a virtual assistant goes like this: the user wants to perform a certain action, and the assistant tries to help by providing the necessary information. Two very common patterns can be observed:

- Ask the bot to do it: The bot gets all the necessary information to carry out an action and performs it automatically.

- Ask the bot how to do it: The bot gets all the necessary information to carry out an action and indicates the steps to perform it.

Both solutions let the user solve their problem. However, depending on the application, what we may really want is for the user to become familiar with our tool in an easy and intuitive way; that is, for the user to learn how to solve the problem.

Give a Man a Fish, and You Feed Him for a Day. Teach a Man To Fish, and You Feed Him for a Lifetime.

We can take the assistant one step further and let it take control of the entire application to show the steps the user should follow to reach their goal. This gives a fast and simple learning process and, therefore, greater user satisfaction: the user has not only solved the problem but has also learned how to solve it.

Cool, we can do this! Nevertheless, we should also take into account a couple of bad habits.

Bad habits

- Intrusion.

The user enters a web or mobile application and the virtual assistant opens automatically, or a prominent icon pops up somewhere, urging the user to press it and open the assistant.

This behavior is too intrusive: the user probably has not had time to see what the application is about and can feel bombarded from the very start. Their first thought is "get out of here", and they close the assistant without even looking at it, as if it were an advertisement (and we all know that advertising is not cool). If the average time spent on a website is around 40 seconds, we have used up several of them while contributing nothing but annoyance.

The best time to help a user is when they need it. This is why a virtual assistant is most satisfying when it is the user who looks for help and chooses to use the assistant.

- False human.

The user is talking to the assistant, which has a photograph and a name as if it were a person, and the conversation includes artificial delays and other behaviors that imitate a human.

While a very elaborate virtual assistant can pass for a human in short conversations, in the vast majority of cases the user realizes it is a bot. This leaves the user feeling deceived, since they expected more dedicated attention to their needs.

Offering a humanized user experience is advisable, but the user must know at all times that they are talking to a bot. The user should be the one who chooses whether to keep using it or to switch to another method of assistance.

Let's go back to the example!

Architecture

Frontend

Built with Angular 7.2.0, its design imitates, in an extremely limited way, a t-shirt shop with a virtual assistant.

What makes this website special is that, when it receives an action from the bot, it shows a set of steps to be taken, and these steps may differ depending on the viewport of the device. The user has to follow these steps to carry out the action.
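The website's code is not reproduced in this post, so what follows is only a minimal TypeScript sketch of the idea under stated assumptions: the action shape (`BotAction`), the CSS selectors and the 768 px breakpoint are all hypothetical. It picks a viewport-dependent list of steps for a bot action and then walks the user through them one at a time.

```typescript
// Hypothetical shape of the action the bot sends once it has all parameters.
interface BotAction {
  type: 'buy-shirt';
  color: string;
  size: string;
}

// One step of the guided tour: which element to highlight and what to tell the user.
interface Step {
  target: string; // a CSS selector; the selectors used below are illustrative only
  hint: string;
}

// Assumed breakpoint: widths up to this value are treated as a mobile layout.
const MOBILE_MAX_WIDTH = 768;

function stepsFor(action: BotAction, viewportWidth: number): Step[] {
  const isMobile = viewportWidth <= MOBILE_MAX_WIDTH;
  const steps: Step[] = [];
  // On small screens the product options are assumed to sit behind a menu button.
  if (isMobile) {
    steps.push({ target: '#menu-button', hint: 'Open the menu' });
  }
  steps.push(
    { target: `#color-${action.color}`, hint: `Choose the ${action.color} color` },
    { target: `#size-${action.size}`, hint: `Choose size ${action.size}` },
    { target: '#add-to-cart', hint: 'Add the t-shirt to the cart' },
  );
  return steps;
}

// Walks the user through the steps in order.
class GuidedTour {
  private index = 0;
  private readonly steps: Step[];

  constructor(steps: Step[]) {
    this.steps = steps;
  }

  // The step currently highlighted, or undefined once the tour is over.
  get current(): Step | undefined {
    return this.steps[this.index];
  }

  // Call when the user completes the current step; returns true once every step is done.
  advance(): boolean {
    if (this.index < this.steps.length) {
      this.index++;
    }
    return this.index >= this.steps.length;
  }
}
```

In an Angular app, `viewportWidth` could come from `window.innerWidth` and `advance()` could be called from a click listener on the highlighted element; both are illustrative wiring choices, not necessarily what the example does.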

Backend

Built with NestJS 6.0.0, it enables secure communication between the frontend and the IBM Watson services.

Hosted on IBM Cloud (Lite plan), it is essentially an API that can be called to initialize a chat or to send new messages to be processed. It also serves the frontend as a static website.
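As a rough sketch of that API surface, the logic behind its two operations can be written in plain TypeScript. The `AssistantClient` interface and every name below are hypothetical stand-ins for the real IBM Watson SDK calls; a common reason to route those calls through a backend is that the service credentials never have to reach the browser. The sketch also assumes that sending an empty first message triggers the assistant's welcome node. In a NestJS app, a class like this would typically be an `@Injectable()` provider called from a controller.

```typescript
// Hypothetical abstraction over the Watson Assistant calls the backend needs.
// A real implementation would wrap the IBM Watson SDK and hold the credentials.
interface AssistantClient {
  createSession(): Promise<string>;
  message(sessionId: string, text: string): Promise<string[]>;
}

interface ChatReply {
  sessionId: string;
  messages: string[];
}

// The two operations exposed by the API: start a chat and send a message.
class ChatService {
  private readonly client: AssistantClient;

  constructor(client: AssistantClient) {
    this.client = client;
  }

  async initChat(): Promise<ChatReply> {
    const sessionId = await this.client.createSession();
    // Assumption: an empty first message makes the assistant reply with its welcome node.
    const messages = await this.client.message(sessionId, '');
    return { sessionId, messages };
  }

  async sendMessage(sessionId: string, text: string): Promise<ChatReply> {
    const trimmed = text.trim();
    // Reject empty input before spending a message from the monthly quota.
    if (trimmed.length === 0) {
      throw new Error('Message text must not be empty');
    }
    const messages = await this.client.message(sessionId, trimmed);
    return { sessionId, messages };
  }
}
```

Hiding the SDK behind an interface also makes the service easy to test with a fake client, without consuming real messages.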

IBM Watson

IBM offers an enormous number of AI services, such as translation, text to speech, speech to text, tone analysis, visual recognition, classifiers and more. You can see the full list of resources in the IBM Cloud Catalog.

For this post, we only use the Watson Assistant service (Lite plan), which lets you create conversational interfaces in a simple way. The Lite plan offers 10,000 free messages per month, so use them with caution.

Our assistant has been trained to recognize only two simple intents:

- Buy a t-shirt: It recognizes any message that expresses the intention to buy a t-shirt. To perform the action, the assistant looks for the color and size in the message. If it finds only one of them, or neither, it asks for the missing ones. Once the assistant has all the information, it indicates the steps needed to buy a t-shirt with the desired parameters.

- More information: It recognizes any message that asks for more information and tells the user to go to the More tab.
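In Watson Assistant this behavior is configured in the dialog rather than written as code, but the decision logic of the buy-a-t-shirt flow can be mirrored in a short TypeScript sketch. The `ShirtSlots` and `Turn` types and the prompt wording are hypothetical. The function merges newly detected entities with those already collected, then either asks for what is still missing or emits the final action.

```typescript
type Color = 'red' | 'green' | 'blue';
type Size = 'S' | 'M' | 'L';

// Entities collected so far in the conversation (hypothetical shape).
interface ShirtSlots {
  color?: Color;
  size?: Size;
}

// The assistant either asks a follow-up question or hands an action to the website.
type Turn =
  | { kind: 'ask'; prompt: string }
  | { kind: 'action'; color: Color; size: Size };

function nextTurn(stored: ShirtSlots, detected: ShirtSlots): { slots: ShirtSlots; turn: Turn } {
  // Newly detected values win; missing ones keep what was collected earlier.
  const slots: ShirtSlots = {
    color: detected.color ?? stored.color,
    size: detected.size ?? stored.size,
  };
  if (slots.color && slots.size) {
    // Everything is known: the website can now show the steps to buy this t-shirt.
    return { slots, turn: { kind: 'action', color: slots.color, size: slots.size } };
  }
  if (!slots.color && !slots.size) {
    return { slots, turn: { kind: 'ask', prompt: 'What color and size would you like?' } };
  }
  // Exactly one value is missing: ask only for that one.
  const prompt = slots.color ? 'What size would you like?' : 'What color would you like?';
  return { slots, turn: { kind: 'ask', prompt } };
}
```

For example, "I want a t-shirt" leads to a question about both values, a follow-up "red" leads to a question about the size, and "M" finally produces the action.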

Next, we show how we trained the assistant to achieve this behavior.

Watson Assistant

Watson Assistant lets you create several assistants, each with one or more skills. A skill holds everything the assistant relies on to conduct a dialogue, and each skill is divided into three sections:

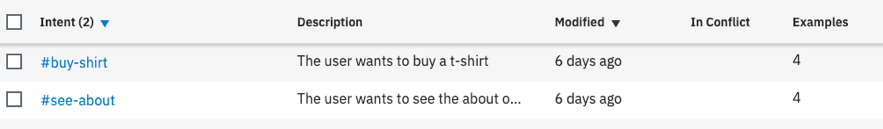

- Intents: The user's ultimate goal, extracted from the message. In our case there are two: #buy-shirt and #more-info.

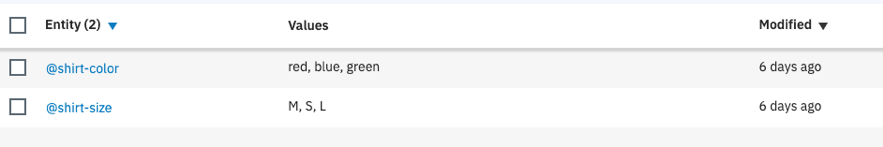

- Entities: The specific values in a message that complete an intent. There are several predefined system entities, such as @sys-date for dates and @sys-person for names. In our case, we created two: @shirt-color, with the values red, green and blue, and @shirt-size, with the values S, M and L and their synonyms Small, Medium and Large.

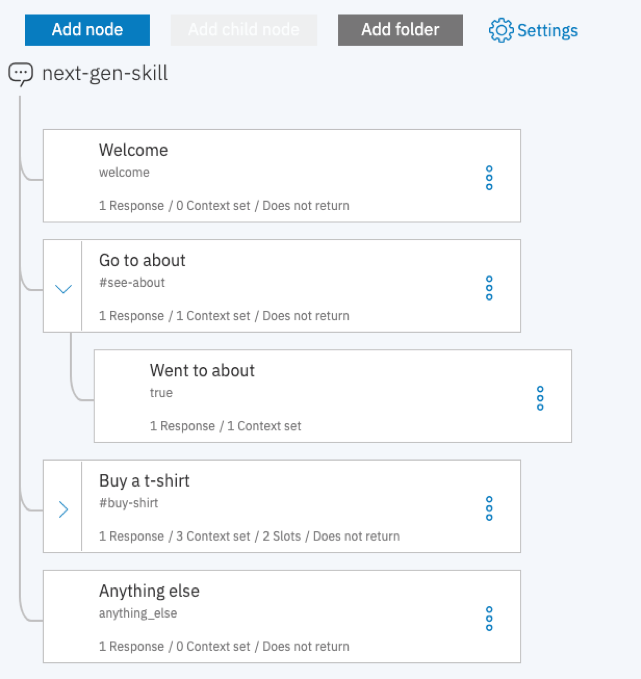

- Dialog: The tree of nodes the assistant uses to hold the conversation. In our case there are 4 nodes: the welcome node, the default node and one node per intent. The intent nodes have subnodes for each of the steps required by their associated action. Reaching the end of one of these nodes means that the user has followed all the steps indicated on the web page.
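To make entity values and synonyms more concrete, here is a naive TypeScript approximation of dictionary-based matching for the two custom entities. It is not how Watson Assistant implements entity recognition; the data layout is only loosely modeled on the description above, and the name `extractEntities` is hypothetical.

```typescript
interface EntityValue {
  value: string;
  synonyms: string[];
}

interface Entity {
  entity: string;
  values: EntityValue[];
}

// The two custom entities described above, with their values and synonyms.
const entities: Entity[] = [
  {
    entity: 'shirt-color',
    values: [
      { value: 'red', synonyms: [] },
      { value: 'green', synonyms: [] },
      { value: 'blue', synonyms: [] },
    ],
  },
  {
    entity: 'shirt-size',
    values: [
      { value: 'S', synonyms: ['Small'] },
      { value: 'M', synonyms: ['Medium'] },
      { value: 'L', synonyms: ['Large'] },
    ],
  },
];

interface EntityMatch {
  entity: string;
  value: string; // always the canonical value, even when a synonym matched
}

// A value matches when it, or one of its synonyms, appears as a whole word
// in the message, ignoring case. Only single-word terms are supported.
function extractEntities(message: string, defs: Entity[]): EntityMatch[] {
  // Apostrophes stay inside tokens so that "I'm" does not produce a stray "m".
  const words = new Set(message.toLowerCase().split(/[^a-z0-9']+/).filter(Boolean));
  const matches: EntityMatch[] = [];
  for (const def of defs) {
    for (const v of def.values) {
      const terms = [v.value, ...v.synonyms].map((t) => t.toLowerCase());
      if (terms.some((t) => words.has(t))) {
        matches.push({ entity: def.entity, value: v.value });
      }
    }
  }
  return matches;
}
```

Mapping synonyms back to a canonical value means the dialog only has to handle S, M and L, regardless of how the user phrases the size.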

The goal of this project is to keep improving it with new AI-powered features and to document this evolution in new blog posts, explaining step by step how our assistant acquires intelligence.

For example, we could chain Speech to Text to transcribe the user's voice, Language Translator to translate it into a common language, Tone Analyzer to gauge the user's mood, Watson Assistant to manage the dialogue, and Text to Speech to generate audio from the assistant's reply.
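Such a chain could be expressed as a sequence of asynchronous stages. In this sketch, each Watson service is hidden behind a hypothetical `VoicePipeline` interface, so the method names and signatures are assumptions rather than the real SDK APIs; only the order of the stages follows the paragraph above.

```typescript
// Hypothetical interface: one method per service in the chain.
interface VoicePipeline {
  speechToText(audio: Uint8Array): Promise<string>;       // Speech to Text
  translate(text: string, target: string): Promise<string>; // Language Translator
  analyzeTone(text: string): Promise<string>;              // Tone Analyzer
  converse(text: string, tone: string): Promise<string>;   // Watson Assistant
  textToSpeech(text: string): Promise<Uint8Array>;         // Text to Speech
}

// Runs one user turn through the stages in order; each stage feeds the next.
async function handleVoiceTurn(
  p: VoicePipeline,
  audio: Uint8Array,
  commonLanguage = 'en',
): Promise<Uint8Array> {
  const transcript = await p.speechToText(audio);
  const translated = await p.translate(transcript, commonLanguage);
  const tone = await p.analyzeTone(translated);
  const reply = await p.converse(translated, tone);
  return p.textToSpeech(reply);
}
```

Because the stages only depend on the interface, any of them can be swapped or stubbed independently, which suits the incremental evolution described above.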

Other examples include using Visual Recognition with a classifier to recognize objects in photographs, or Discovery to analyze large collections of text documents and extract knowledge from them.

Example: T-Shirt store

Now that we have seen how the assistant is built, it is time to look at the example. Remember that it has only been trained to help buy a t-shirt and to answer requests for more information. Although it is extremely simple, it should be enough to show the fundamentals.

The example can be found here (sorry that the website is so ugly; I didn't want to spend too much time on it).

I hope you liked it and found this post useful. Who knows? Maybe one day we will see something similar in Flowable or at mimacom ;)