Alfresco Photo Analyzer Module

About the module

In this post I'll describe the project we developed for the last Global Hack-a-thon. The goal was simply to build something different, using Alfresco for content management and integrating it with external processes or tools. This Alfresco module analyzes photos of people and extracts information such as gender, age, facial expression, emotions and more. You can therefore bulk-import photos into Alfresco and then search them (for example, to find out how many photos of 30-year-old people are in the repository).

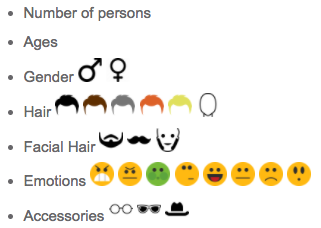

The list of possible information to extract from a photo is:

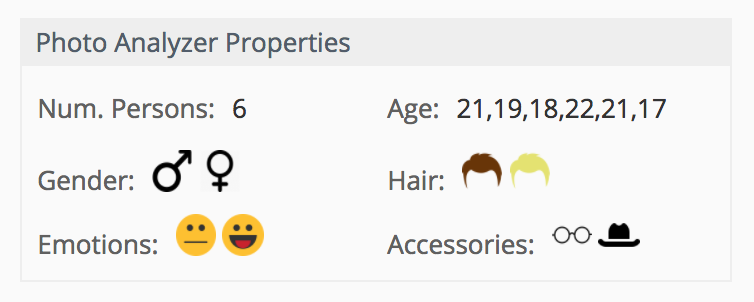

The result is a box showing the properties extracted from the photo.

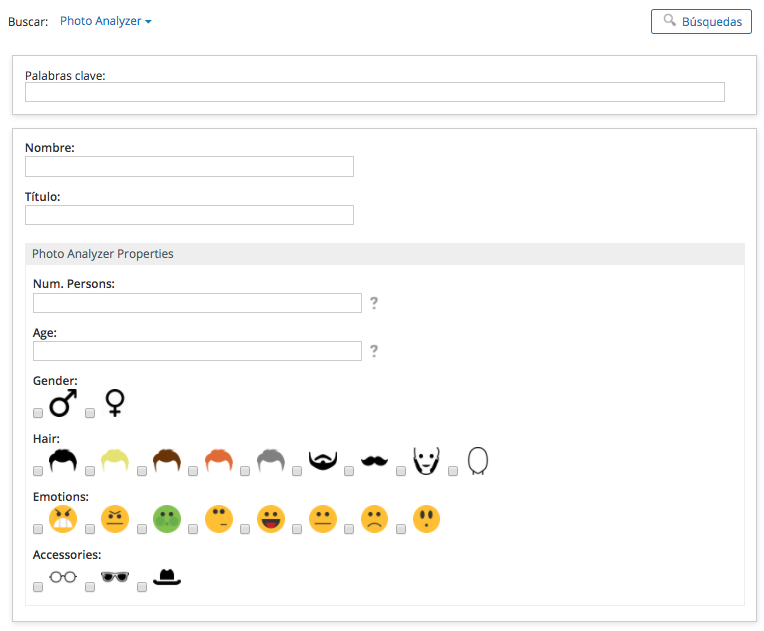

And a friendly search interface.

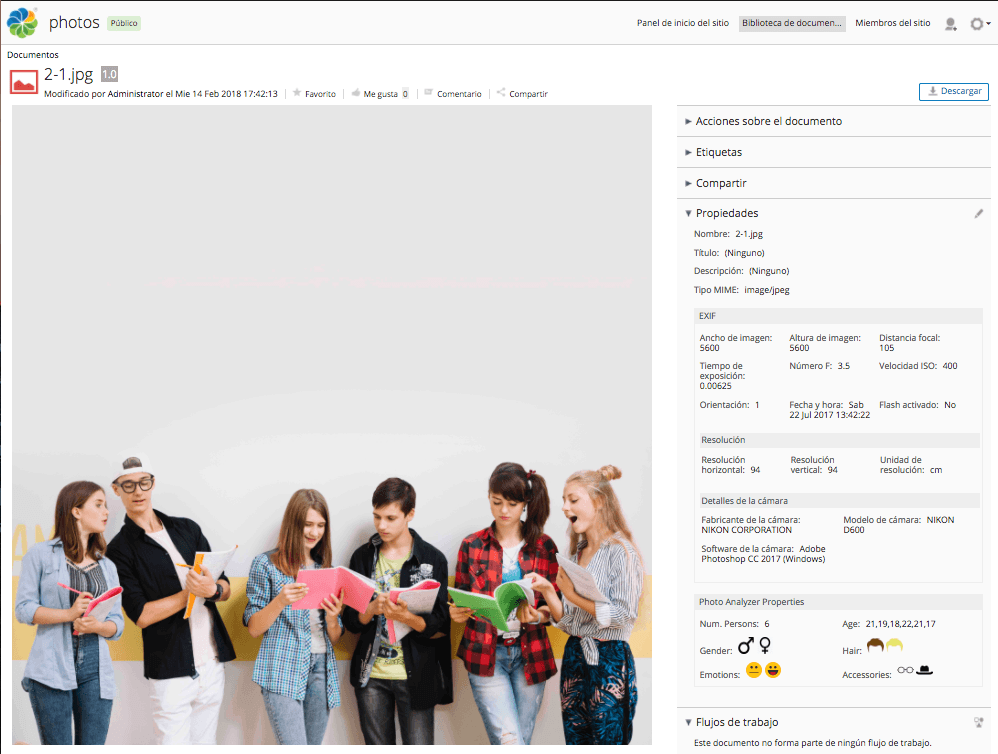

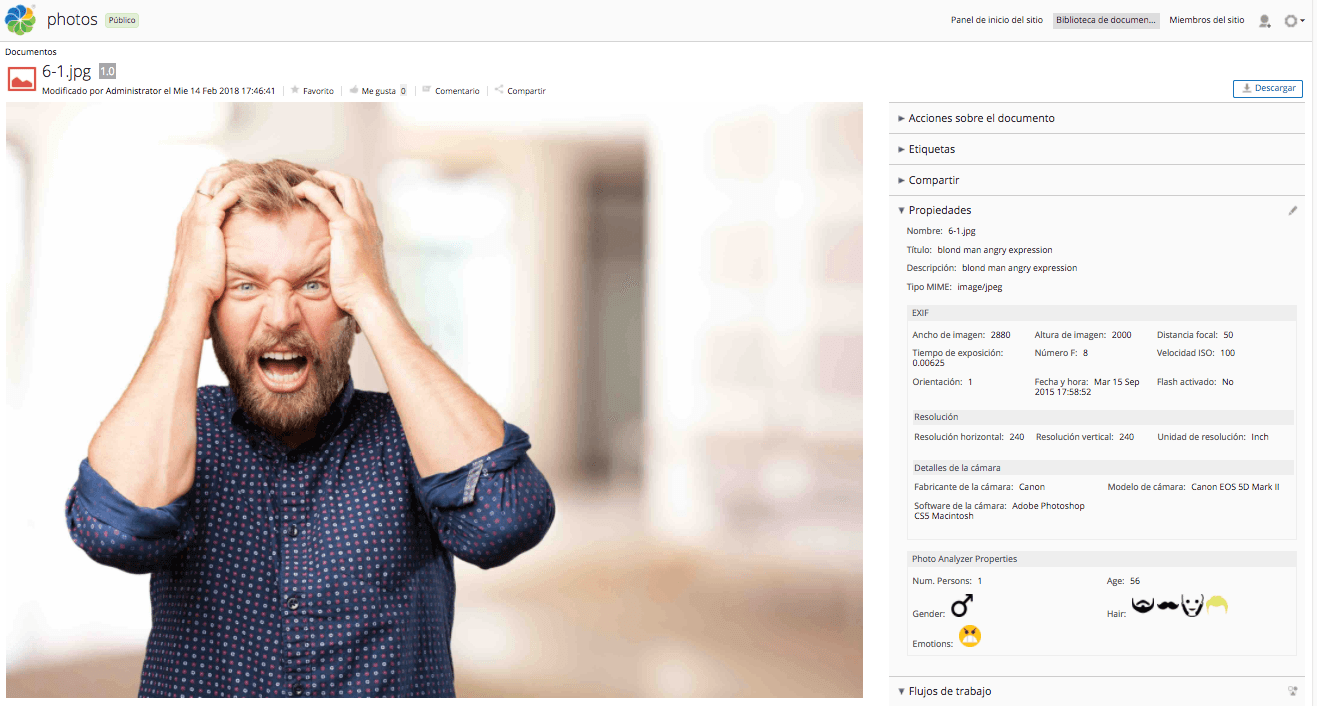

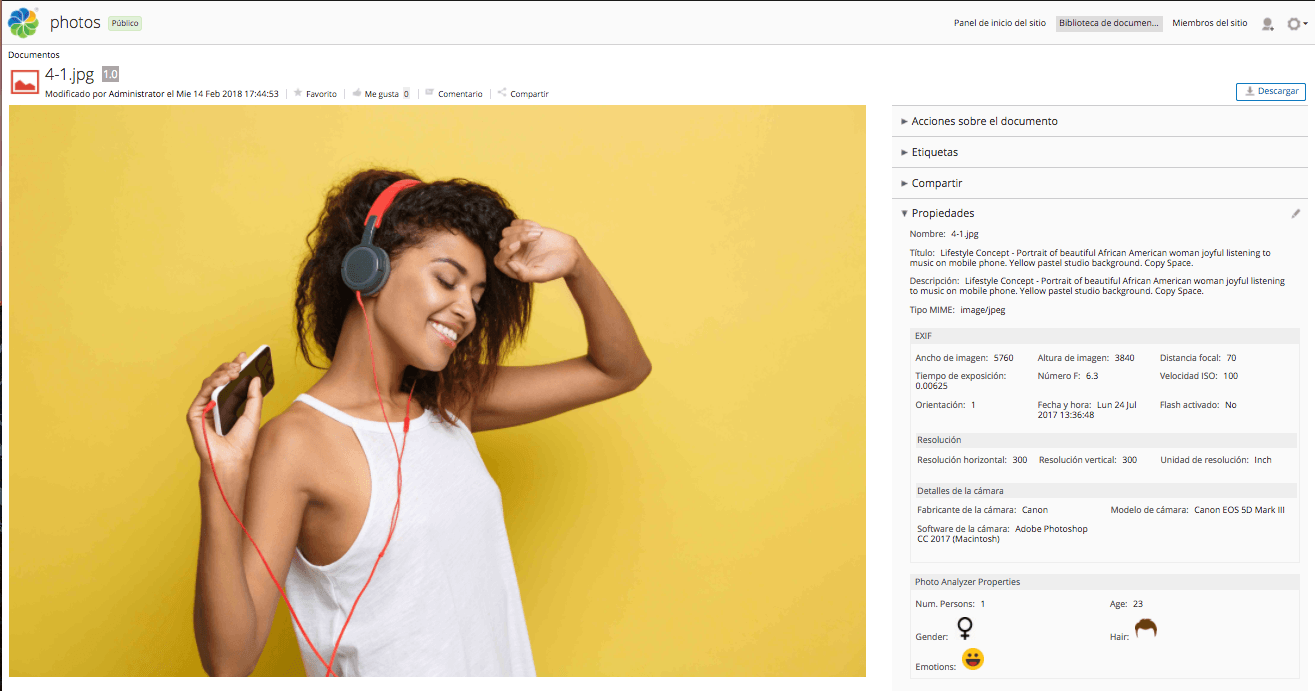

And some real examples:

For more details about the user experience, you can watch the YouTube demo.

How to configure it

For this module we integrated Microsoft Azure with Alfresco Community Edition 5.2.

To configure the Azure API connection, change the following properties in the alfresco-global.properties file.

    # Endpoint URL; it depends on the geographical region you are in
    photo_analyzer.azure.api.url=https://westeurope.api.cognitive.microsoft.com/face/v1.0/detect

    # List of attributes that we want to analyze in the response
    photo_analyzer.azure.api.attribute_list=age,gender,emotion,hair,facialHair,accessories,glasses

    # Trial subscription key (*): https://azure.microsoft.com/es-es/try/cognitive-services/
    photo_analyzer.azure.api.subscription_key=<subscription-key>

(*) Limitations of the trial subscription key: 30,000 transactions, at most 20 per minute.
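Because a bulk import can easily exceed 20 calls per minute, it may help to pace the calls on the client side. The following is a minimal sketch of a sliding-window pacer. It is not part of the module: the class name `TrialQuotaPacer` and its method are hypothetical, and the limit of 20 calls per 60 seconds is simply taken from the trial restriction above.

```java
import java.util.ArrayDeque;
import java.util.Deque;

/**
 * Hypothetical helper (not part of the module): tracks recent call times
 * so that at most maxCalls are allowed inside any window of windowMillis.
 */
final class TrialQuotaPacer {
    private final int maxCalls;
    private final long windowMillis;
    private final Deque<Long> recentCalls = new ArrayDeque<>();

    TrialQuotaPacer(int maxCalls, long windowMillis) {
        this.maxCalls = maxCalls;
        this.windowMillis = windowMillis;
    }

    /**
     * Returns 0 and records the call if it is allowed at nowMillis;
     * otherwise returns how many milliseconds the caller should wait.
     */
    synchronized long tryAcquire(long nowMillis) {
        // Forget calls that have already left the sliding window.
        while (!recentCalls.isEmpty()
                && nowMillis - recentCalls.peekFirst() >= windowMillis) {
            recentCalls.pollFirst();
        }
        if (recentCalls.size() < maxCalls) {
            recentCalls.addLast(nowMillis);
            return 0L;
        }
        // The window is full: wait until the oldest call expires.
        return windowMillis - (nowMillis - recentCalls.peekFirst());
    }
}
```

A caller would create `new TrialQuotaPacer(20, 60_000L)`, pass `System.currentTimeMillis()` to `tryAcquire` before each request, and sleep for the returned number of milliseconds whenever it is greater than zero.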

A bit of code...

On the technical side, to extract all the information from the photos we created a behaviour that runs whenever a new photo is uploaded to or created in the repository. The behaviour relies on the integration with Microsoft Cognitive Services, so it has to send a request to the external service and collect the response.

The following method builds the request to Azure:

    public HttpPost prepareRequest(File file) throws URISyntaxException {
        // Endpoint URL and query parameters come from alfresco-global.properties
        URIBuilder builder = new URIBuilder(Constants.getPropertyValue(Constants.AZURE_FACE_API_URL));
        builder.setParameter("returnFaceId", "true");
        builder.setParameter("returnFaceRectangle", "true");
        builder.setParameter("returnFaceAttributes", Constants.getPropertyValue(Constants.FACE_ATTRIBUTES_LIST_TO_EXTRACT));
        URI uri = builder.build();

        // The photo is sent as raw bytes, authenticated with the subscription key
        HttpPost request = new HttpPost(uri);
        request.setHeader("Content-Type", Constants.APPLICATION_OCTET_STREAM);
        request.setHeader("Ocp-Apim-Subscription-Key", Constants.getPropertyValue(Constants.API_SUBSCRIPTION_KEY));

        FileEntity reqEntity = new FileEntity(file, ContentType.APPLICATION_OCTET_STREAM);
        request.setEntity(reqEntity);
        return request;
    }
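The method above only prepares the request; it still has to be executed and the response body read. As a rough illustration of the whole round trip, here is a JDK-only sketch that swaps Apache HttpClient for `java.net.http` (Java 11+). The class and method names are hypothetical and not taken from the module, and treating any status other than HTTP 200 as an error is an assumption.

```java
import java.io.IOException;
import java.net.URI;
import java.net.URLEncoder;
import java.net.http.HttpClient;
import java.net.http.HttpRequest;
import java.net.http.HttpResponse;
import java.nio.charset.StandardCharsets;

/** Hypothetical JDK-only variant of the request shown above. */
final class FaceDetectClientSketch {

    /** Builds the POST request with the same parameters and headers. */
    static HttpRequest buildRequest(String apiUrl, String attributes,
                                    String subscriptionKey, byte[] image) {
        String query = "returnFaceId=true&returnFaceRectangle=true"
                + "&returnFaceAttributes="
                + URLEncoder.encode(attributes, StandardCharsets.UTF_8);
        return HttpRequest.newBuilder(URI.create(apiUrl + "?" + query))
                .header("Content-Type", "application/octet-stream")
                .header("Ocp-Apim-Subscription-Key", subscriptionKey)
                .POST(HttpRequest.BodyPublishers.ofByteArray(image))
                .build();
    }

    /** Sends the request and returns the JSON body as a string. */
    static String send(HttpRequest request)
            throws IOException, InterruptedException {
        HttpResponse<String> response = HttpClient.newHttpClient()
                .send(request, HttpResponse.BodyHandlers.ofString());
        // Assumption: only HTTP 200 carries a usable detection result.
        if (response.statusCode() != 200) {
            throw new IOException("Face API returned HTTP "
                    + response.statusCode() + ": " + response.body());
        }
        return response.body();
    }
}
```

The image bytes could be read with `java.nio.file.Files.readAllBytes`, and the string returned by `send` is the JSON document to be parsed next.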

Then we only need to parse the JSON response and map each value to the corresponding metadata field of our Alfresco model.

    [
      {
        "faceId": "c5c24a82-6845-4031-9d5d-978df9175426",
        "faceRectangle": {
          "width": 78,
          "height": 78,
          "left": 394,
          "top": 54
        },
        "faceLandmarks": {
          "pupilLeft": {
            "x": 412.7,
            "y": 78.4
          },
          "pupilRight": {...},
          "noseTip": {...},
          "mouthLeft": {...},
          ...
          "underLipBottom": {...}
        },
        "faceAttributes": {
          "age": 71.0,
          "gender": "male",
          "smile": 0.88,
          "facialHair": {
            "moustache": 0.8,
            "beard": 0.1,
            "sideburns": 0.02
          },
          "glasses": "sunglasses",
          "headPose": {
            "roll": 2.1,
            "yaw": 3,
            "pitch": 0
          },
          "emotion": {
            "anger": 0.575,
            "contempt": 0,
            "disgust": 0.006,
            "fear": 0.008,
            "happiness": 0.394,
            "neutral": 0.013,
            "sadness": 0,
            "surprise": 0.004
          },
          "hair": {
            "bald": 0.0,
            "invisible": false,
            "hairColor": [
              {"color": "brown", "confidence": 1.0},
              {"color": "blond", "confidence": 0.88},
              {"color": "black", "confidence": 0.48},
              {"color": "other", "confidence": 0.11},
              {"color": "gray", "confidence": 0.07},
              {"color": "red", "confidence": 0.03}
            ]
          },
          "makeup": {
            "eyeMakeup": true,
            "lipMakeup": false
          },
          "occlusion": {
            "foreheadOccluded": false,
            "eyeOccluded": false,
            "mouthOccluded": false
          },
          "accessories": [
            {"type": "headWear", "confidence": 0.99},
            {"type": "glasses", "confidence": 1.0}
          ],
          "blur": {
            "blurLevel": "Medium",
            "value": 0.51
          },
          "exposure": {
            "exposureLevel": "GoodExposure",
            "value": 0.55
          },
          "noise": {
            "noiseLevel": "Low",
            "value": 0.12
          }
        }
      }
    ]
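Several attributes in this response are score maps rather than single values (for example `emotion`, or `hairColor` with its confidences), so mapping them to a metadata field means picking one winner. The sketch below shows one possible way to do that once the JSON has been parsed into plain Java values. The class name and the property names `pa:age`, `pa:gender`, `pa:emotion` and `pa:hairColor` are hypothetical; the module's real content model may use different names and rules.

```java
import java.util.LinkedHashMap;
import java.util.Map;

/** Hypothetical mapper from parsed face attributes to metadata values. */
final class FaceAttributeMapper {

    /** Returns the key with the highest score, or null for an empty map. */
    static String dominant(Map<String, Double> scores) {
        String best = null;
        double bestScore = Double.NEGATIVE_INFINITY;
        for (Map.Entry<String, Double> entry : scores.entrySet()) {
            if (entry.getValue() > bestScore) {
                best = entry.getKey();
                bestScore = entry.getValue();
            }
        }
        return best;
    }

    /** Flattens the attributes into one value per (hypothetical) property. */
    static Map<String, Object> toMetadata(double age, String gender,
                                          Map<String, Double> emotion,
                                          Map<String, Double> hairColor) {
        Map<String, Object> props = new LinkedHashMap<>();
        // The API reports age as a decimal; store it as a whole number.
        props.put("pa:age", (int) Math.round(age));
        props.put("pa:gender", gender);
        props.put("pa:emotion", dominant(emotion));
        props.put("pa:hairColor", dominant(hairColor));
        return props;
    }
}
```

With the sample response above, the emotion with the highest score is `anger` (0.575) and the hair colour with the highest confidence is `brown` (1.0), so those would be the values stored.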

Conclusions and references

In this blog post you saw how the Alfresco Photo Analyzer Module works, integrating Alfresco Content Services with Microsoft Cognitive Services. Here is a list of useful links with more material on this topic:

- Face API: Facial Recognition | Microsoft Azure

- Emotion API: Emotions Detector | Microsoft Azure

- Microsoft Azure Documentation | Microsoft Docs

- Azure Code Samples | Microsoft Azure

And some links about the project:

- GitHub: https://github.com/davidantonlou/alfrescoPhotoAnalyzer

- YouTube demo: http://youtu.be/TZdIdTk6D3U?t=20m32s

Other related projects using Google API: