Let's spin up Confluent Kafka with RBAC in KRaft mode using Ansible

Kafka's KRaft mode has been production-ready for quite some time, and many companies have already moved to this ZooKeeper-less Kafka. Not all of them use container orchestration tools; some prefer, for example, a fleet of VMs managed with Ansible. In this blog entry we look at setting up Kafka in KRaft mode using Ansible, with Confluent's RBAC (Role-Based Access Control) enabled.

Setting up the stage

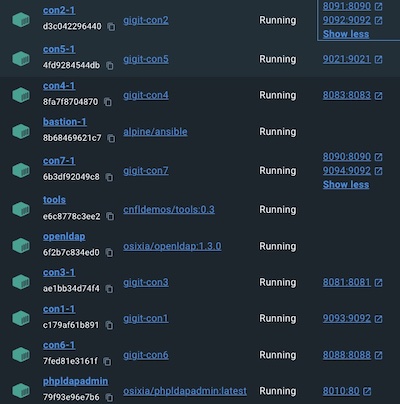

We are going to play with Confluent's Ansible playbooks and Confluent's Kafka distribution v7.7.2 with its commercial RBAC functionality, and we start with containers spun up with Docker.

Configuration overview

We use docker-compose. First we need an LDAP implementation in which users and groups are going to be stored.

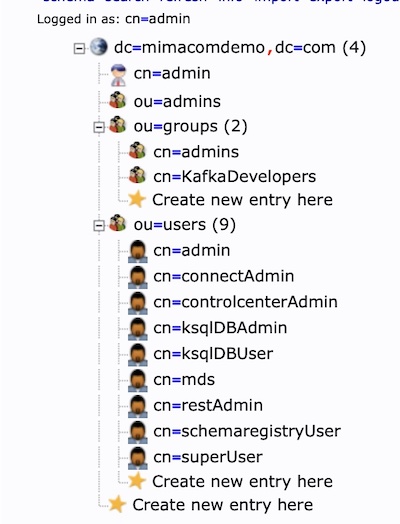

We've chosen OpenLDAP, and in case you would like to take a look at the domain tree, we provision an LDAP UI (phpldapadmin) too.

This is the corresponding ldap-part of docker-compose

    openldap:
      networks:
        - mima-net-rbac
      image: osixia/openldap:1.3.0
      hostname: openldap
      container_name: openldap
      environment:
        LDAP_ORGANISATION: "mimacomDemo"
        LDAP_DOMAIN: "mimacomdemo.com"
        LDAP_BASE_DN: "dc=mimacomdemo,dc=com"
      volumes:
        - ./scripts/security/ldap_users:/container/service/slapd/assets/config/bootstrap/ldif/custom
      command: "--copy-service --loglevel debug"

    phpldapadmin:
      image: osixia/phpldapadmin:latest
      container_name: phpldapadmin
      hostname: phpldapadmin
      ports:
        - "8010:80"
      environment:
        - PHPLDAPADMIN_LDAP_HOSTS=openldap
        - PHPLDAPADMIN_HTTPS=false
      depends_on:
        - openldap
      networks:
        - mima-net-rbac

Here the LDAP users' definitions are provided in ./scripts/security/ldap_users, the LDAP UI is going to be available locally at http://localhost:8010, and the base domain name is dc=mimacomdemo,dc=com.

We need some tools as well. We make these available through Confluent's tools container:

    tools:
      image: cnfldemos/tools:0.3
      hostname: tools
      container_name: tools
      entrypoint: /bin/bash
      tty: true
      networks:
        - mima-net-rbac

Next we define a container where the Ansible magic happens

    bastion:
      image: alpine/ansible
      entrypoint: /bin/bash
      networks:
        - mima-net-rbac
      tty: true
      volumes:
        - ./:/etc/data

The volume mapping lets us use the hosts.yml (shown below) and ansible.cfg files from within the bastion container.

Finally, we provision a few SSH-enabled containers where the Kafka components will reside. Some of them have a port mapping. Below you can see one of these container definitions.

    ...
    con1:
      networks:
        - mima-net-rbac
      tty: true
      build: .
      expose:
        - "22"
      ports:
        - "9093:9092"
      privileged: true
      entrypoint: ["/usr/sbin/init"]
    ...

We have 7 of those con<X> containers, and they differ at most by the optional port mapping. These containers are built using a simplistic Dockerfile:

    FROM ubuntu:20.04
    RUN apt-get update && apt-get install -y openssh-server && apt-get install -y python3
    RUN mkdir /var/run/sshd && mkdir /var/dataa
    RUN echo 'root:root123' | chpasswd
    RUN sed -i 's/#PermitRootLogin prohibit-password/PermitRootLogin yes/' /etc/ssh/sshd_config
    RUN useradd -m nonroot && echo "nonroot:nonroot" | chpasswd && adduser nonroot sudo
    EXPOSE 22
    CMD ["/usr/sbin/sshd", "-D"]

As you can see, we chose ubuntu:20.04, which is one of the versions officially supported by Confluent.

Ansible's hosts.yml

As already mentioned, we use Confluent's official Ansible collection and define an inventory that lets us spin up the following architecture:

    kafka controller : con1
    kafka broker 1   : con2
    kafka broker 2   : con7
    schema registry  : con3
    kafka connect    : con4
    control center   : con5
    ksql             : con6

Provisioning of containers

In the main directory of the project we run

    docker-compose up -d

With that we have bare containers prepared for the Ansible playbooks and the OpenLDAP container already provisioned. The LDAP UI is accessible through your browser at http://localhost:8010. Once you visit this site, you can log in using cn=admin,dc=mimacomdemo,dc=com as the login and admin as the password.

You will then be presented with the users and groups created from the LDIF files available in the ./scripts/security/ldap_users/ directory. We use these users in Ansible's inventory file.
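If you prefer the command line over the UI, a sketch like the one below could be used to list the provisioned users. It assumes the `ldapsearch` client that ships in the osixia/openldap image, the `admin` password mentioned above, and users living under `ou=users`; the helper names `domain_to_base_dn` and `ldap_list_users` are made up for this illustration and are not part of the project. The first helper also shows how LDAP_BASE_DN follows from LDAP_DOMAIN.

```shell
# Hypothetical helpers for illustration only.

# Turn an LDAP domain such as "mimacomdemo.com" into its base DN.
domain_to_base_dn() {
  printf '%s\n' "$1" | sed -e 's/\./,dc=/g' -e 's/^/dc=/'
}

# Print the uid attribute of every entry under ou=users (assumed layout).
# Must be run from the directory that holds docker-compose.yml.
ldap_list_users() {
  base_dn=$(domain_to_base_dn "$1")
  docker-compose exec openldap ldapsearch -x \
    -H ldap://localhost:389 \
    -D "cn=admin,$base_dn" -w admin \
    -b "ou=users,$base_dn" uid
}
```

Calling `ldap_list_users mimacomdemo.com` should then print one entry per user defined in the LDIF files.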

Ansible automation

Having spun up the containers, we now issue Ansible commands in the bastion container. We enter it with

    docker-compose exec -w /etc/data bastion bash

and install Confluent's collection:

    ansible-galaxy collection install confluent.platform

Once that's done, let's check whether we can successfully ping and validate the hosts:

    ansible -i hosts.yml all -m ping
    ansible-playbook -i hosts.yml confluent.platform.validate_hosts

Let's now take a closer look at our inventory, hosts.yml. First of all, this is how the mapping of components onto hosts looks:

    kafka_controller:
      hosts:
        con1:
      vars:
        kafka_controller_log_dir: "{{general_log_path}}"

    schema_registry:
      hosts:
        con3:
      vars:
        schema_registry_log_dir: "{{general_log_path}}"

    kafka_connect:
      hosts:
        con4:
      vars:
        kafka_connect_log_dir: "{{general_log_path}}"

    control_center:
      hosts:
        con5:
      vars:
        control_center_log_dir: "{{general_log_path}}"
        control_center_default_internal_replication_factor: 1

    ksql:
      hosts:
        con6:
      vars:
        ksql_log_dir: "{{general_log_path}}"
        confluent.support.metrics.enable: false

    kafka_broker:
      hosts:
        con2:
        con7:
      vars:
        ...

Pretty simple, isn't it? All properties defined in vars above are optional. You can see we plan to have a single Kafka controller and two brokers, each running in a separate role. As of now, Confluent's Ansible collection does not allow running a Kafka cluster in shared (combined) mode, where the same node acts as both broker and controller.

Let's move to the part of hosts.yml where RBAC-dedicated configuration is defined:

    kafka_broker:
      hosts:
        con2:
        con7:
      vars:
        kafka_broker_log_dir: "{{general_log_path}}"
        kafka_broker_custom_listeners:
          service_listener:
            name: SERVICE
            port: 9093
            ssl_enabled: false
            ssl_mutual_auth_enabled: false
            sasl_protocol: plain

    all:
      vars:
        ...
        sasl_protocol: plain
        rbac_enabled: true
        mds_retries: 5
        mds_super_user: mds
        mds_super_user_password: mds
        # set of component-related ldap users
        kafka_broker_ldap_user: admin
        kafka_broker_ldap_password: admin
        control_center_ldap_user: controlcenterAdmin
        control_center_ldap_password: controlcenterAdmin

Here, apart from the additional listener definition in the broker part, the MDS-specific (Metadata Service) properties are listed, including the MDS super-user credentials and the mandatory rbac_enabled switched to true. And finally, the crucial LDAP connection part:

    kafka_broker_custom_properties:
      log.dirs: /var/dataa
      listener.name.service.plain.sasl.jaas.config: org.apache.kafka.common.security.plain.PlainLoginModule required;
      listener.name.service.plain.sasl.server.callback.handler.class: io.confluent.security.auth.provider.ldap.LdapAuthenticateCallbackHandler
      ldap.java.naming.factory.initial: com.sun.jndi.ldap.LdapCtxFactory
      ldap.com.sun.jndi.ldap.read.timeout: 3000
      ldap.java.naming.provider.url: ldap://openldap:389
      ldap.java.naming.security.principal: cn=admin,dc=mimacomdemo,dc=com
      ldap.java.naming.security.credentials: admin
      ldap.java.naming.security.authentication: simple
      ldap.user.search.base: ou=users,dc=mimacomdemo,dc=com
      ldap.group.search.base: ou=groups,dc=mimacomdemo,dc=com
      ldap.group.search.mode: GROUPS
      ldap.user.name.attribute: uid
      ldap.user.memberof.attribute.pattern: cn=(.*),ou=users,dc=mimacomdemo,dc=com
      ldap.group.name.attribute: cn
      ldap.group.member.attribute.pattern: memberUid
      ldap.user.object.class: inetOrgPerson

Here you can see the previously defined service listener configured with the LDAP callback handler, a set of ldap-prefixed properties defining how to connect to the LDAP instance (in this case a local one), and another set of properties that let Confluent's RBAC extract account data. In the above example, dc=mimacomdemo,dc=com is the root of both search bases, which is exactly what we used while setting up OpenLDAP locally.
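The `.pattern` properties are regular expressions with a capture group. To get a feel for what such a pattern matches, here is a minimal sketch that applies the same expression with `sed`. It only illustrates the regex mechanics, under the assumption that the first capture group is what ends up as the extracted name; `sed` uses basic regular expressions rather than Java's syntax, and both the helper `extract_cn` and the user `alice` are hypothetical.

```shell
# Illustration only: mimic the capture group of
#   cn=(.*),ou=users,dc=mimacomdemo,dc=com
# Prints the captured name, or nothing when the value does not match.
extract_cn() {
  printf '%s\n' "$1" |
    sed -n 's/^cn=\(.*\),ou=users,dc=mimacomdemo,dc=com$/\1/p'
}

# "alice" is a made-up entry, not one of the users from the project.
extract_cn 'cn=alice,ou=users,dc=mimacomdemo,dc=com'
```

A value under a different base produces no output, which is a quick way to spot a search base that does not line up with the directory tree.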

Now it's time to run the playbook. In the bastion container, run the ansible-playbook -i hosts.yml confluent.platform.all command.

It may take a while. After it's finished, we can proceed to define role bindings from within the tools container: docker-compose exec tools bash.

RBAC

First, let's confirm that MDS is active on both brokers. Issue this command:

    curl -u mds:mds http://con2:8090/security/1.0/activenodes/http | jq

You should see the following:

    [
      "http://con7:8090",
      "http://con2:8090"
    ]
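Right after the playbook finishes, the endpoint may need a moment before it lists every node. A small retry loop along these lines could wait for it. This is only a sketch: the function names, the 30 attempts and the 5-second pause are arbitrary choices for illustration, and the credentials are the `mds:mds` pair from the inventory above.

```shell
# Succeeds when every expected node URL appears in the JSON read from stdin.
all_nodes_active() {
  body=$(cat)
  for node in "$@"; do
    case "$body" in
      *"\"$node\""*) ;;      # this node is listed, check the next one
      *) return 1 ;;         # at least one node is missing
    esac
  done
  return 0
}

# Poll the activenodes endpoint until all expected nodes show up.
# $1 = MDS base URL, remaining arguments = expected node URLs.
wait_for_mds() {
  url=$1
  shift
  tries=0
  while [ "$tries" -lt 30 ]; do
    if curl -sf -u mds:mds "$url/security/1.0/activenodes/http" |
        all_nodes_active "$@"; then
      return 0
    fi
    tries=$((tries + 1))
    sleep 5
  done
  return 1
}
```

From the tools container it could be called as `wait_for_mds http://con2:8090 http://con2:8090 http://con7:8090`.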

Then confirm you get a proper response to these commands:

    curl -u mds:mds http://con2:8090/security/1.0/roles
    curl -u mds:mds http://con2:8090/security/1.0/roleNames | jq

The latter should produce:

    [
      "AuditAdmin",
      "ClusterAdmin",
      "DeveloperManage",
      "DeveloperRead",
      "DeveloperWrite",
      "Operator",
      "ResourceOwner",
      "SecurityAdmin",
      "SystemAdmin",
      "UserAdmin"
    ]

Now it's time to bind the users we have stored in LDAP to the roles listed above. Still within the tools container:

    # log in; provide credentials when asked, e.g. admin / admin
    confluent login --url con2:8090

    # learn the IDs of the Kafka, ksql, Schema Registry and Connect clusters
    confluent cluster describe --url http://con6:8088
    confluent cluster describe --url http://con3:8081
    confluent cluster describe --url http://con4:8083

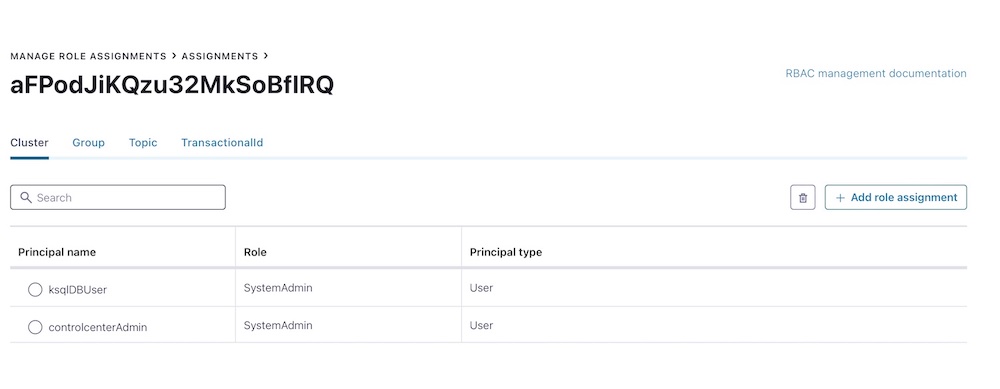

We can see which users are assigned a given role, e.g.

    confluent iam rbac role-binding list --kafka-cluster-id <cluster_id> --role SystemAdmin

where <cluster_id> is the ID we got earlier. The response confirms that only the controlcenterAdmin user is a SystemAdmin.

    Principal
    ---------------------------
    User:controlcenterAdmin

Finally, let's make the user ksqlDBUser, which is available in LDAP, a SystemAdmin as well:

    confluent iam rbac role-binding create --principal User:ksqlDBUser --kafka-cluster-id <cluster_id> --role SystemAdmin

Now if we issue the confluent iam rbac role-binding list command from above, we get two users in the response.
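When this check is scripted rather than read by eye, the listing can be piped through a small filter. The sketch below assumes the plain table layout shown in this post (one principal per line, possibly padded with spaces or `|` borders); `has_binding` is a hypothetical helper name.

```shell
# Succeeds when the principal given as $1 appears as a full line
# in the role-binding table read from stdin.
has_binding() {
  awk -v want="$1" '
    { gsub(/^[ \t|]+|[ \t|]+$/, "") }   # trim padding and table borders
    $0 == want { found = 1 }
    END { exit(found ? 0 : 1) }
  '
}
```

For example: `confluent iam rbac role-binding list --kafka-cluster-id <cluster_id> --role SystemAdmin | has_binding User:ksqlDBUser && echo "bound"`.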

We could have changed the scope as well by additionally providing --ksql-cluster-id, --connect-cluster-id or --schema-registry-cluster-id with the value obtained with one of the commands listed above.
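To make the scoping concrete, here is a small dry-run helper that assembles the command with an optional extra scope flag and prints it instead of running it. The helper name `role_binding_cmd` is hypothetical, the cluster IDs in the usage note are placeholders, and the flag names are simply the ones used in this post; it is worth checking `confluent iam rbac role-binding create --help` for the CLI version at hand.

```shell
# Print (not run) a role-binding command.
# $1 = principal, $2 = role, $3 = Kafka cluster ID,
# $4 = optional scope flag without leading dashes, $5 = its cluster ID.
role_binding_cmd() {
  principal=$1
  role=$2
  kafka_id=$3
  scope_flag=${4:-}
  scope_id=${5:-}
  cmd="confluent iam rbac role-binding create --principal $principal --role $role --kafka-cluster-id $kafka_id"
  if [ -n "$scope_flag" ]; then
    cmd="$cmd --$scope_flag $scope_id"
  fi
  printf '%s\n' "$cmd"
}
```

`role_binding_cmd User:ksqlDBUser SystemAdmin <cluster_id> ksql-cluster-id <ksql_cluster_id>` prints the ksql-scoped variant, which can be reviewed before it is pasted into the tools container.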

We can confirm the role assignments in Control Center: go to http://localhost:9021 in your browser and open the Manage role assignments tab.

This concludes the KRaft, RBAC and Ansible tutorial. The code is accessible on Pawel's GitHub.