Handling Pessimistic Locking with JPA on Oracle, MySQL, PostgreSQL, Apache Derby and H2

One of the key properties for correct pessimistic locking handling and testing is LockTimeout. Unfortunately its JPA support by different RDBMS providers is far from complete. In this article I will show you how you may overcome this limitation on Oracle, MySQL, PostgreSQL, Apache Derby and H2. Moreover, I will give you some suggestions about when pessimistic locking might be the correct solution and when not.

But before that, let's take a closer look at what pessimistic locking is all about.

Pessimistic Locking Explained

- If you expect to have many concurrent writers on the same row/-s in a relational database table...

- or if you need to implement some synchronization mechanism on database level like semaphores and so...

... the pessimistic locking could be the right choice!

🔔 Please note that this article is focused on exclusive row-level locks. If you are interested on other pessimistic locking aspects please check direct the JPA and RDBMS provider's documentation.

Before you decide to embrace the pessimistic locking solution you should first have an answer to the following questions:

- Could you avoid the concurrency for your concrete problem at all?

- Could you solve the concurrency problem with optimistic locking handling instead?

- How pessimistic locking works?

- What problems does pessimistic locking solve?

- What are the drawbacks of using pessimistic locking?

While concurrency avoidance is out of scope of this article, I will provide you some hints for the other 4 questions:

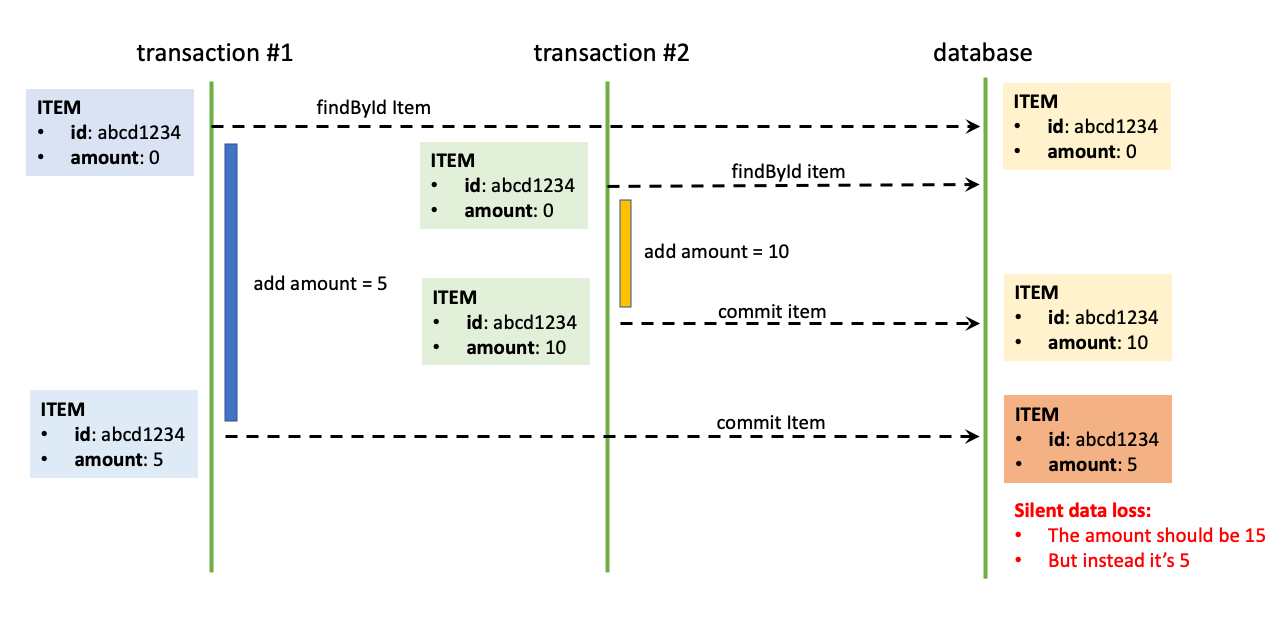

Concurrent Database Transactions Problem

In most RDBMS the default transaction isolation level is at least read committed. It solves dirty reads phenomena, which guarantees that the transaction reads only committed data by other transactions.

Because of the isolation level and of the concurrent nature of the transactions, the conflict could happen when two transactions have been processed simultaneously:

- Both of them are reading the same state of the record;

- Both of them are making different changes;

- One of them will commit earlier, persisting its changes to database;

- The second one will commit a bit later, silently overwriting the data persisted by the previous one.

🔔 In this case the optimistic locking handling would be a better solution because the concurrency is low.

But just for a demonstration purpose, I'll show you how you could solve it with a pessimistic locking solution:

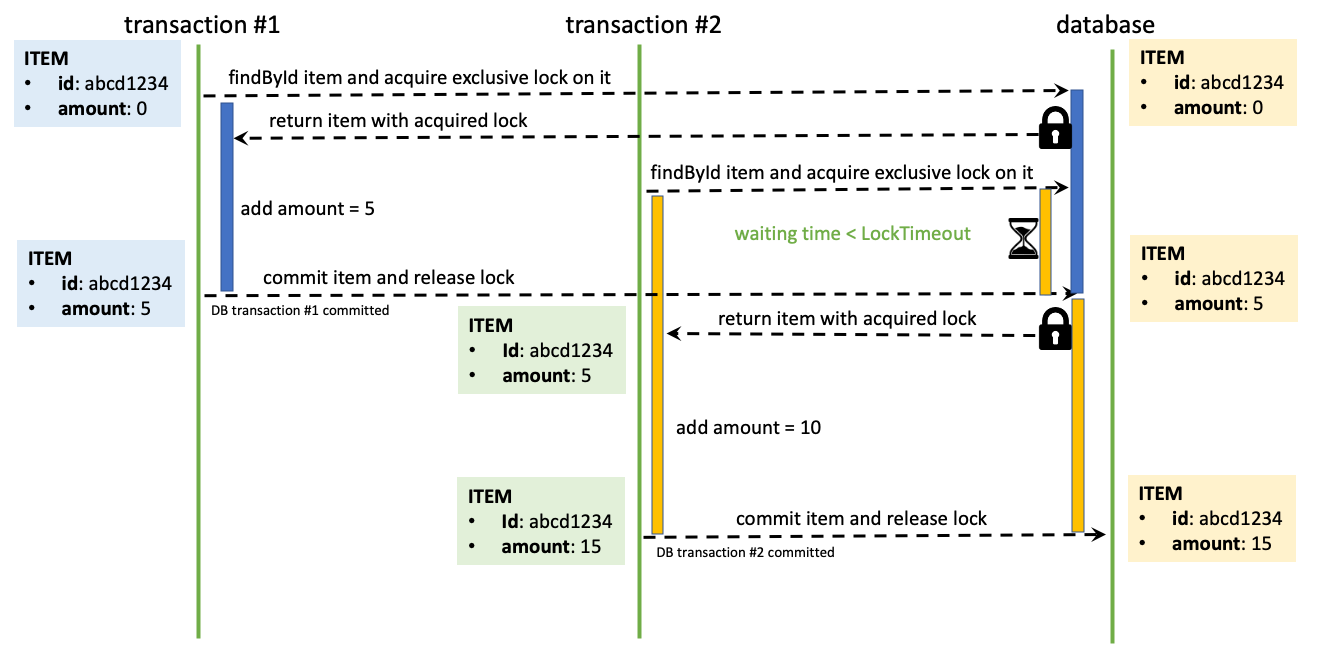

Exclusive Pessimistic Locking In Action

- One of the transactions obtains a row-level exclusive pessimistic lock;

- The other transaction wants to obtain the same lock as well, but has to wait till the release. It will happen either when the first transaction has been committed or when it has been roll-backed.

While the waiting time for acquiring the lock for the second transaction in this concrete case is lower than the defined LockTimeout, there is no exception thrown.

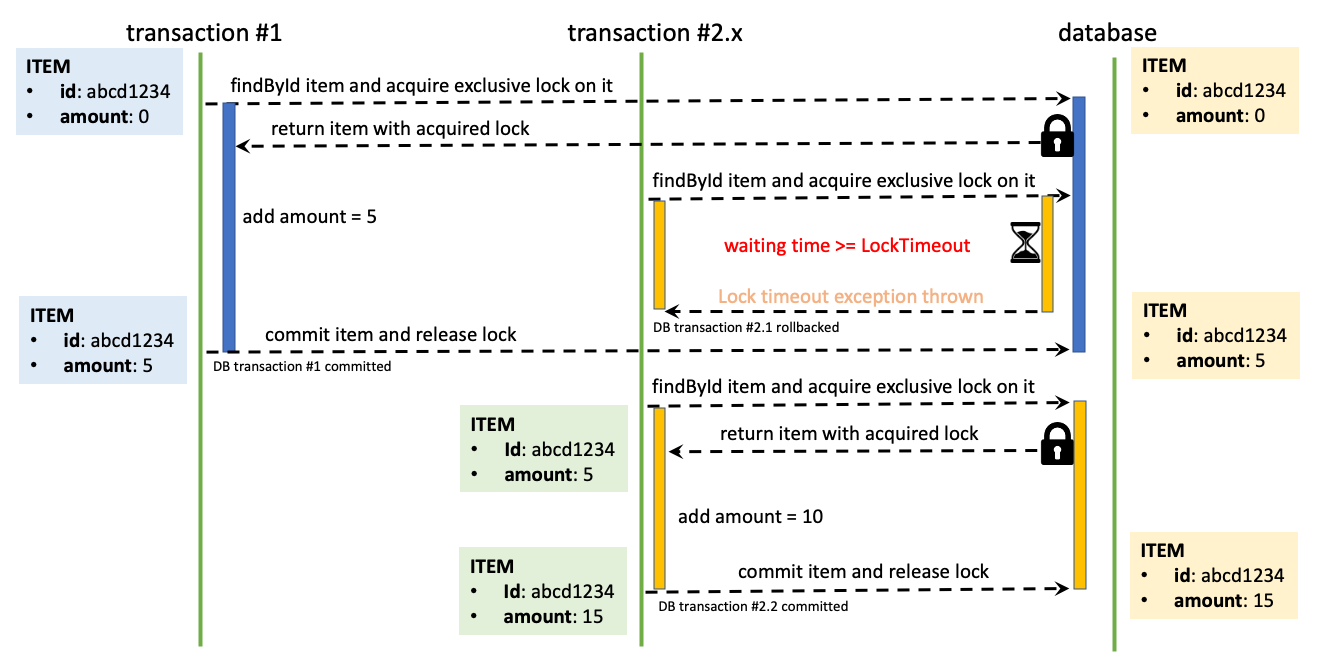

What Happens When The LockTimeout's Boundary Has Been Reached?

- If a transaction waits longer than LockTimeout for acquiring the exclusive lock, then a LockTimeoutException will be thrown without marking the transaction as rolled backed;

- If you use Spring Framework and you don't handle the exception inside the transaction then automatically the transaction will be rolled backed, though.

- If there is some retry mechanism in place, it could try to obtain the lock again.

🔔 Handling a LockTimeoutException through internal retry might not be the most adequate mechanism. If the LockTimeout was not enough, instead of catching the exception and retrying to obtain it, it could make more sense just to double the duration of LockTimeout.

Now that I showed you how pessimistic locking works, let's find some example where pessimistic locking is actually a better solution than optimistic locking.

High-Level Writing Concurrency Problem

- What if the expected behaviour of the system is to have many concurrent writers on the same row in database?

- How to guarantee the integrity of data?

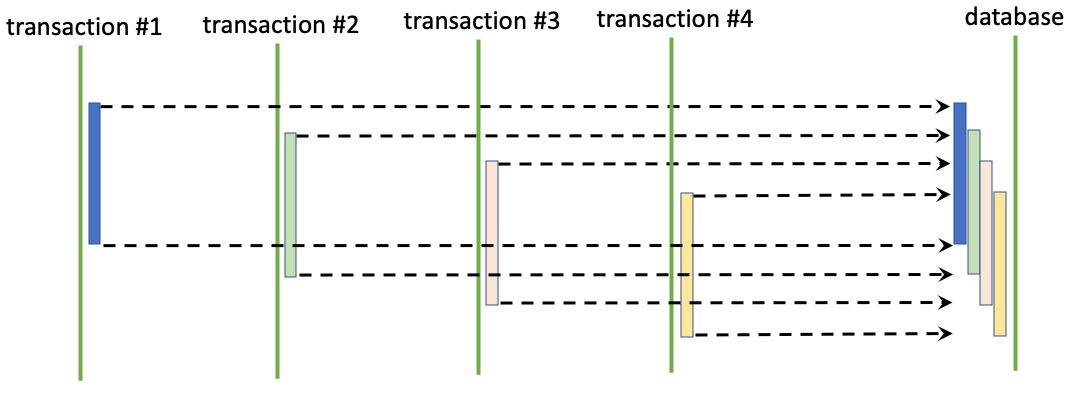

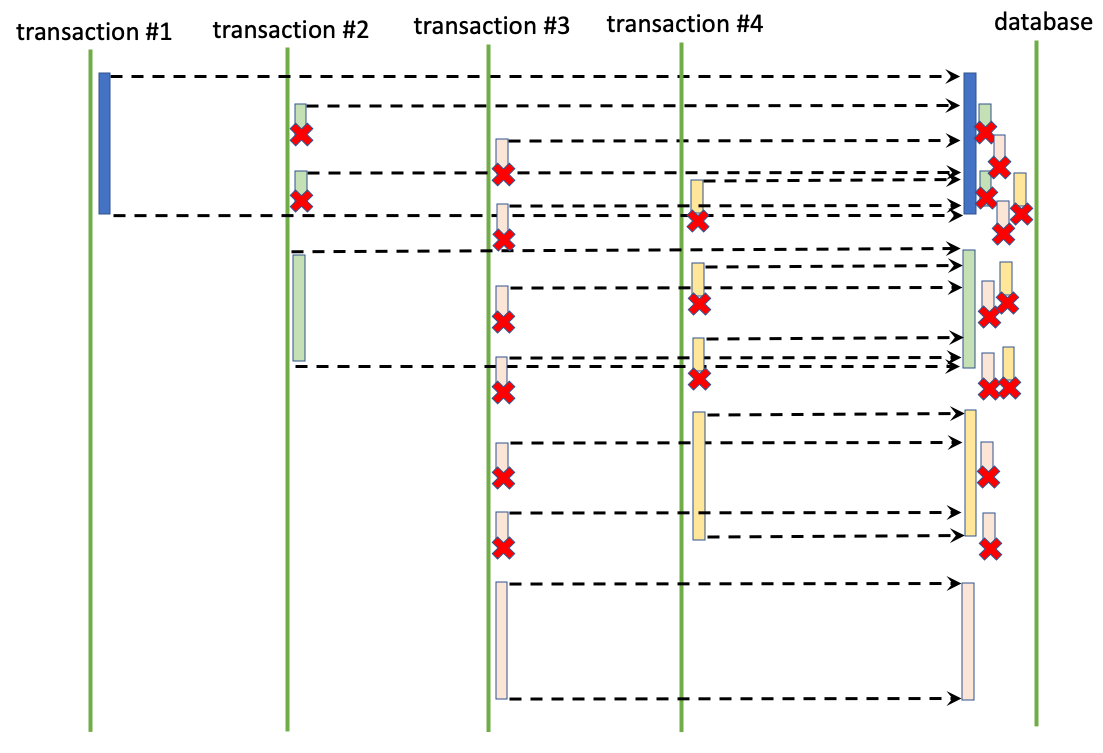

I will show you what could happen if you implement optimistic locking handling with 4 concurrent transactions:

Optimistic Locking For Solving High-Level Writing Concurrency Problem

- One of the transactions will succeed to commit;

- Other three transactions will generate one or more optimistic locking exceptions;

- If there is retry mechanism, then the other three will retry till success;

- As you see in this simplified example with only 4 one-time incoming concurrent transactions, there were 4 commits and 11 rollbacks.

I will show you very roughly how you could calculate the minimal baseline of the amount of retries needed for handling optimistic locking:

| concurrency level | minimum retries for transaction | minimum total retries |

|---|---|---|

| 1 | 0 | 0 |

| 2 | 0 + 1 | 1 |

| 3 | 0 + 1 + 2 | 3 |

| 4 | 0 + 1 + 2 + 3 | 6 |

| 5 | 0 + 1 + 2 + 3 + 4 | 10 |

From these numbers, the following formula can be empirically extracted:

minTotalRetries(concurrencyLevel) = F(x) = x * (x-1)/2

- In the sequence diagram I showed you an example where with 4 concurrent transactions there were 11 rollbacks. They hardly could be less than 6. That's why I defined 6 as a minimum baseline.

- Based on this formula if you have 20 concurrent transactions they will generate a minimum of 190 rollbacks;

🔔 Well, as you see with so many rollbacks by high level of concurrency, it doesn't make much sense to use optimistic locking handling.

Will pessimistic locking come to the rescue?

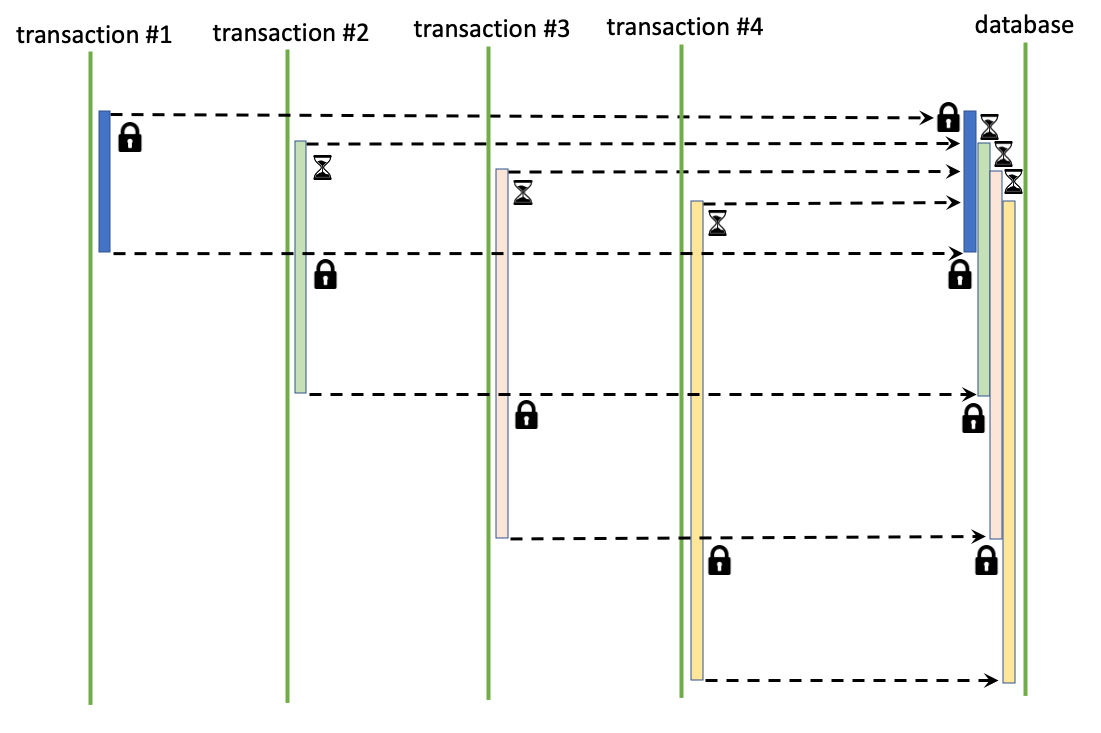

Exclusive Pessimistic Locking For Solving High-Level Writing Concurrency Problem

- Good news: There are only 4 transactions and all of them will commit;

- Bad news: All transactions, which are blocked awaiting to acquire the lock, will be not available for other application threads.

🔔 Some transactions will reach the "LockTimeout's" boundary, will generate LockTimeoutException and will require handling mechanism. This could sound like extra development effort, but it could save your system from exhausted resources, especially if you have lower LockTimeout and a good pessimistic locking handling strategy!

How Long Should The LockTimeout Be?

- It depends on your system's requirements, but if LockTimeout is longer than 10 seconds, maybe it's too long;

- Try to visualize how fast your system will exhaust thread connection pool resources in case of single long-running queries with an exclusive row-level lock in high-level concurrency on it;

- And the idea of obtaining back control on your transactions without killing them manually in the server should help you decide how long LockTimeout should be.

Why Is It Not A Good Idea To Implement Pessimistic Locking With NOWAIT?

- I have seen some pessimistic locking solutions where the LockTimeout is set to minimum. In that case we are in so called "NOWAIT" scenario;

- In my opinion, this is a misuse of the tool pessimistic locking;

🔔 If you don't use the "blocking feature" of pessimistic locking, then most probably you could solve the same problem with optimistic locking handling.

What Could Be A Good Way To Handle Lock Timeout Exception?

Let me first explain why I would not suggest using internal retry mechanism:

-

If you want to apply a retry as LockTimeoutException handling strategy after some idle time like:

- try to acquire lock with LockTimeout set to 3 sec;

- handle LockTimeoutException and wait idle for 3 sec;

- then retry to acquire lock with LockTimeout set to 3 sec...

...then the application thread and its associated transaction (if any) will remain blocked (in this case for 9 sec) and will not be available to serve other incoming requests;

-

Instead of implementing a retry with delay, it would be much simpler and with similar efficiency just to increase the LockTimeout (to 9 sec in this case);

🔔 On the other hand, an external mechanism for handling LockTimeoutExceptions with retries could be a valuable investment for your architecture: For example a dedicated error handling microservice.

What About Deadlocks?

- Bad news: The use of exclusive pessimistic lock can increase the likelihood of deadlocks, wherein two (or more) transactions each hold locks that the other wants. The risk increases when multiple exclusive locks have been acquired from the same transaction;

- Good news: Most RDBMS providers have deadlock detection mechanisms which identify deadlocks and release them for you;

- More good news: Sometimes a problem which requires acquiring of a double lock could be solved as two separate problems where only one lock at a time is acquired.

🔔 Don't forget to adjust deadlock timeout (the timeout after which the database checks for deadlocks): Their values should be in sync with LockTimeout!

Implementing Pessimistic Locking With Different Providers

Till now, I demonstrated to you the importance of LockTimeout for a correct implementation of exclusive pessimistic locking.

In the following paragraphs I will show you:

- What is the jpa support for a row-level exclusive pessimistic locking between different providers;

- What is the native support for LockTimeout;

- How to implement a pessimistic locking with JPA and some of the most popular RDBMS which you might be using in production: Oracle, MySQL and PostgreSQL;

- How to implement a pessimistic locking with JPA and in-memory databases which you might be using for integration tests: H2 and Apache Derby.

The Comparison Results Between Different Providers Have Been Achieved Through The Following Setup:

- Spring Boot 2.3.1.RELEASE

- spring-boot-starter-data-jpa

and:

| provider | maven groupId | maven artifactId:version | database server |

|---|---|---|---|

| Oracle | com.oracle.jdbc | ojdbc8:12.2.0.1 | oracle-xe-11g |

| MySQL | mysql | mysql-connector-java:8.0.20 | mysql:8.0.20 |

| PostgreSQL | org.postgresql | postgresql:42.2.14 | postgres:12.3 |

| H2 | com.h2database | h2:1.4.200 | in-memory |

| Apache Derby | org.apache.derby | derby:10.14.2.0 | in-memory |

🔔 I didn't find any complete and reliable information on the internet about LockTimeout and the jpa support of it by different vendors. That's why I decided to invest some time, to test all features first-hand and to summarize them bellow!

JPA Pessimistic Locking Support By Different Providers

| jpa feature | Oracle | MySQL | PostgreSQL | H2 | Apache Derby |

|---|---|---|---|---|---|

| LockModeType.PESSIMISTIC_WRITE | x | x | x | x | x |

| javax.persistence.lock.timeout | x | ||||

| javax.persistence.lock.timeout=0 (nowait) | x | x | |||

| handling of LockTimeoutException | x | x | x | x |

As you see, the jpa support for LockTimeout by different providers listed in the table is not that great:

- Only Oracle has a full jpa support;

- PostgreSQL has a support for nowait only;

- H2 in-memory database interrupts the connection and does not allow you to handle the LockTimeoutException gracefully;

🔔 Note that if you use Spring Framework instead of LockTimeoutException you have to handle CannotAcquireLockException.

Native LockTimeout Support by Different Providers

| LockTimeout | Oracle | MySQL | PostgreSQL | H2 | Apache Derby |

|---|---|---|---|---|---|

| unit of measurement | sec | sec | ms | ms | sec |

| nowait (or similar) | 0 | 0 | 1 | 1 | 0 |

| min | 0 | 0 | 1 | 1 | 0 |

| max | 2.147.483 | oo (no internal limit) | 2.147.483.647 | 2.147.483.647 | oo (no internal limit) |

| infinite wait | -1 | N/A | 0 | N/A | N/A |

| default | -1 | 50 | 0 | 4000 | 60 |

🔔 As you see in the table, not setting LockTimeout for a pessimistic locking could cause resource exhaustion for your production system: Some databases, like Oracle and PostgreSQL, have a LockTimeout of infinite!

🔔 As you notice there are many differences between different vendors: the meaning of -1 and 0, the different min values, the different measure units! Maybe this explains partially the reason why they don't have good jpa support for LockTimeout!

Setting LockTimeout With JPA & Oracle

- Set LockTimeout through JPA query in Spring Repository:

@Lock(LockModeType.PESSIMISTIC_WRITE)

@QueryHints({@QueryHint(name = "javax.persistence.lock.timeout", value = "3000")})

Optional<Item> getItemById(UUID id);

🔔 This jpa query will try to acquire a pessimistic lock with LockTimeout set to 3 seconds (3000 ms). For more information check Oracle documentation here and here.

🔔 As you see Oracle has great jpa support for setting LockTimeout. No native code needed!

🔔 If you need an example how to test pessimistic locking handling against Oracle take a look at this blog post!

Setting LockTimeout With JPA & MySQL

- Get LockTimeout:

select @@innodb_lock_wait_timeout

- Set LockTimeout:

set session innodb_lock_wait_timeout = 3

🔔 This native query takes effect for the current transaction only. You could now acquire a pessimistic lock through jpa query, and it will set LockTimeout to 3 seconds. For more information check MySQL documentation here.

🔔 This native query has a small side effect: It sets as well the default LockTimeout for subsequent transactions which are part of the current session. If you decide to use it do not rely anymore on default LockTimeout, but be sure to set LockTimeout for all queries which need to acquire a pessimistic lock!

🔔 If you need an example how to test pessimistic locking handling against MySql take a look at this blog post!

Setting LockTimeout With JPA & PostgreSQL

- Get LockTimeout:

show lock_timeout

- Set LockTimeout:

set local lock_timeout = 3000

🔔 This native query takes effect for the current transaction only. You could now acquire a pessimistic lock through jpa query, and it will set LockTimeout to 3 seconds (3000 ms). For more information check PostgreSQL documentation here or check here.

🔔 No side effects here!

🔔 If you need an example how to test pessimistic locking handling against PostgreSQL take a look at this blog post!

Setting LockTimeout With JPA & H2 (In-Memory Database)

- Set LockTimeout:

spring:

datasource:

url: jdbc:h2:mem:test;DB_CLOSE_DELAY=-1;LOCK_TIMEOUT=3000

🔔 Adding LOCK_TIMEOUT parameter to the database connection string will set the default LockTimeout to 3 seconds (3000 ms). In my experience there is no way to set it for a single transaction (or at least I was not able to find a way 😉)! For more information check H2Database documentation here.

🔔 According to the documentation to avoid closing database with the last connection you need to set DB_CLOSE_DELAY to -1. Unfortunately this will not solve the "Connection is closed" problem, which you obtain after LockTimeoutException and which make impossible to test your pessimistic locking handling strategy.

🔔 In this database the LockTimeout parameter is not very accurate. For example if you set it to 2000 ms (2 seconds), the actual LockTimeoutException comes in 4 seconds!

After this unfortunate experience with H2 database 😫, I decided to look for another in-memory database more suitable for a pessimistic locking integration testing...

...I tried HsqlDB as well, but the situation hadn't improved much...

...and I was about to give up when I finally found it...

📢 Okay, here it comes!

Setting LockTimeout With JPA & Apache Derby (In-Memory Database)

- Get LockTimeout:

VALUES SYSCS_UTIL.SYSCS_GET_DATABASE_PROPERTY('derby.locks.waitTimeout')

- Set LockTimeout:

CALL SYSCS_UTIL.SYSCS_SET_DATABASE_PROPERTY('derby.locks.waitTimeout', '3')

🔔 This native query will set the default LockTimeout to 3 seconds (3000 ms). For more information check Apache Derby documentation here.

🔔 Execute this native query at start-up only and within dedicate transaction. It will take effect in the subsequent transactions only.

🔔 As you can see Apache Derby is a first-class solution for a pessimistic locking handling integration testing!

Summary

- Pessimistic locking is a valuable tool for solving race conditions in high concurrency environment and for synchronization on database-level;

- Be aware of the pitfalls when you use an exclusive pessimistic locking in production;

- Invest resources in having robust LockTimeoutException handling strategy;

- Cover your pessimistic locking handling with integration tests;

- Try to implement the concurrency solutions as resilient as possible;

- And last, but not least: You be the master of handling race conditions and high load, not the other way around! 😃