Binary Inputs for Jenkins Builds on OpenShift

Diving into the OpenShift Container Platform (OCP) is a challenge. One of our client's primary objectives was to host a microservice architecture (Spring Boot) on a microservice platform, which is why they acquired OpenShift.

With Jenkins CI, OpenShift provides an out-of-the-box build infrastructure. Still, building your own software on OCP comes with several challenges. It requires a good deal of basic knowledge of OpenShift (and therefore Docker and Kubernetes) as well as Jenkins, which can be a little overwhelming. Finding a clear path through the vast documentation is especially difficult.

This blog post sheds some light on how to build software artifacts (Spring Boot executable JAR/WAR files) with Jenkins and package them into Docker images. OpenShift supports several kinds of build configurations.

For us, it is essential to distinguish between two types of builds.

- The Jenkins Pipeline build, defined in a Jenkinsfile written in Groovy. We call it the Artifact build.

- The Docker image build.

Bear in mind that our example contains lots of variables. Our aim is to give you an understanding of the problem and our solution, which you can use as a jump start for implementing your own builds with OpenShift and Jenkins. Our example uses a persistent Jenkins instance running on OpenShift.

Beginning with OpenShift Builds

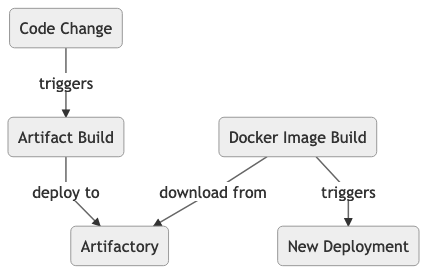

In our first approach, we built the Artifact and deployed it to JFrog Artifactory, which we used as a cloud service. The Docker build on OpenShift then queried Artifactory for the Artifact's download URL and downloaded it.

This approach has disadvantages:

- The builds were unrelated and disconnected.

- The Docker build had to retrieve the Artifact itself, which required extra logic to fetch only the most recently built Artifact.

- The retrieval logic was scripted in the Dockerfile.

- This approach is error-prone, since the downloaded Artifact is not guaranteed to match the one produced by the preceding Artifact build.

The following Dockerfile was used for the Docker image build:

```dockerfile
FROM redhat-openjdk-18/openjdk18-openshift
ENV ARTIFACTORY_SERVER="https://demo.jfrog.io/repo" GROUP_ID="com.mimacom.ocp" ARTIFACT_ID="demo-app"
ARG USERNAME
ARG PASSWORD
ARG ARTIFACT_VERSION="1074.1-SNAPSHOT"
ARG JAVA_OPTS="-Xms384m -Xmx384m"
USER root
RUN yum install wget -y && yum clean all -y
RUN wget -O /usr/bin/jq https://github.com/stedolan/jq/releases/download/jq-1.5/jq-linux64 && chmod a+rx /usr/bin/jq
RUN mkdir -p /srv/app/
RUN curl "$ARTIFACTORY_SERVER/api/search/gavc?g=$GROUP_ID&a=$ARTIFACT_ID&v=$ARTIFACT_VERSION&repos=libs-snapshots-local" -s > output.txt
RUN wget -O download.txt $(cat output.txt | /usr/bin/jq -r '.results[0].uri')
RUN wget -O /srv/app/micro-service.jar $(cat download.txt | /usr/bin/jq -r '.downloadUri')
WORKDIR /
ADD bootstrap.sh /
ADD logback-spring.xml /srv/app/
RUN chmod 755 /bootstrap.sh && chmod g=u /etc/passwd && chown -R 1001:0 /srv/app
EXPOSE 8080
# OpenShift requires images to run as non-root by default
USER 1001
ENTRYPOINT ["/bootstrap.sh"]
```

As you can see, a lot of ugly logic (installing wget and jq, two Artifactory API calls and the download itself) is scripted within the Dockerfile.

New Approach

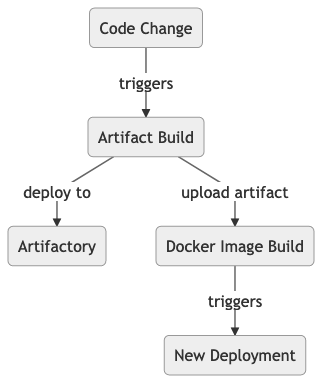

Our requirements:

- We need a one-to-one correlation between the Artifact build and the Docker image build.

- We need to avoid downloading the Artifact.

- We want less internet traffic and a Docker image build that does not depend on a successful download.

- We want to decouple the Artifactory deployment from the Docker image build.

- A failed deployment to Artifactory must not impact the Docker image build.

Our new approach therefore passes the Artifact directly to the Docker build.

Solution

The solution is easy once you know the available options.

- The Docker build configuration can take either `git` or `binary` as source input.

- Our first mistake was to use `git` as source input.

- The two inputs are mutually exclusive.

- Instead of `git`, we now use the `binary` input.
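If a BuildConfig was first created with a `git` source, as ours was, one way to switch it to binary input without recreating it is a JSON merge patch that drops the `git` block. The sketch below assumes `oc` is logged in to the cluster; the function name and the `OC` override (set `OC=echo` to print the command instead of running it) are hypothetical helpers, not part of our original setup.

```shell
#!/usr/bin/env bash
# Sketch: switch an existing BuildConfig from git to binary source input.
# Assumptions: `oc` is logged in; OC=echo turns this into a dry run.
# The function name is hypothetical.

switch_to_binary_source() {
  local bc_name="$1" namespace="$2"
  # JSON merge patch: "git": null removes the git block (git and binary
  # inputs are mutually exclusive); "binary": {} enables binary input.
  "${OC:-oc}" patch "bc/${bc_name}" -n "${namespace}" --type=merge \
    -p '{"spec":{"source":{"type":"Binary","git":null,"binary":{}}}}'
}

# Example: switch_to_binary_source micro-service-docker development
```

Other git-specific settings on the BuildConfig, such as webhook triggers, are left untouched by this patch.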

Here is the example configuration:

```yaml
kind: BuildConfig
apiVersion: build.openshift.io/v1
metadata:
  name: micro-service-docker
  namespace: development
  labels:
    build: micro-service
    app: micro-service
spec:
  source:
    type: Binary
    binary: {}
  output:
    to:
      kind: ImageStreamTag
      name: micro-service:latest
      namespace: development
  postCommit: {}
  resources: {}
  strategy:
    dockerStrategy:
      dockerfilePath: ./openshift/Dockerfile
    type: Docker
```

With that change, we can now use the following stage in the Jenkinsfile:

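```groovy
stage('Docker Build in development namespace') {
    steps {
        // -F (--follow) streams the build log; --from-dir . uploads the workspace as binary input
        sh '''
            oc start-build -F $DOCKER_BUILD_NAME --from-dir . -n $DEV
        '''
    }
}
```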
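`--from-dir .` uploads the whole Jenkins workspace directory. If that gets large, one option is to stage only the files the Dockerfile actually uses and upload that directory instead. This is a hypothetical sketch: the function name is ours, and the file layout is inferred from the `COPY`/`ADD` lines and the `dockerfilePath` shown in this post, with `bootstrap.sh` and `logback-spring.xml` assumed to sit at the workspace root.

```shell
#!/usr/bin/env bash
# Sketch: stage a minimal build context for `oc start-build --from-dir`.
# Assumed layout (inferred from this post, adjust to your repository):
#   microservice-app/target/micro-service.jar  -> used by the Dockerfile's COPY
#   openshift/Dockerfile                       -> BuildConfig dockerfilePath
#   bootstrap.sh, logback-spring.xml           -> ADDed from the context root
stage_build_context() {
  local workspace="$1" context_dir="$2"
  local files=(
    microservice-app/target/micro-service.jar
    openshift/Dockerfile
    bootstrap.sh
    logback-spring.xml
  )
  local f
  for f in "${files[@]}"; do
    # Fail early in Jenkins instead of inside the OpenShift build pod.
    if [ ! -f "${workspace}/${f}" ]; then
      echo "missing build input: ${f}" >&2
      return 1
    fi
    # Recreate the relative path so the Dockerfile paths still match.
    mkdir -p "${context_dir}/$(dirname "${f}")"
    cp "${workspace}/${f}" "${context_dir}/${f}"
  done
}
```

In the `sh` step, the staged directory would then replace `.` as the upload source, for example `ctx=$(mktemp -d) && stage_build_context . "$ctx" && oc start-build -F $DOCKER_BUILD_NAME --from-dir "$ctx" -n $DEV`.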

Alternatively, instead of calling `oc` from a shell step, you can use the OpenShift Jenkins Client Plugin DSL in scripted syntax inside a `script` block:

```groovy
stage('Docker Build in dev') {
    steps {
        script {
            openshift.withCluster() {
                openshift.withProject("development") {
                    // bc = build configuration ...
                    def build = openshift.selector('bc', 'micro-service-docker').startBuild("--from-dir .")
                    build.logs('-f')
                }
            }
        }
    }
}
```

Either stage triggers the OpenShift Docker build. The Jenkins workspace directory is packed into an archive and uploaded to the pod that runs the Docker build, where the archive contents are extracted and serve as the build context. Logs like the following appear in the Jenkins output:

```
[logs:build/micro-service-docker-12] Receiving source from STDIN as archive ...
[logs:build/micro-service-docker-12] Step 1/15 : FROM redhat-openjdk-18/openjdk18-openshift
...
```

Instead of the previous complicated logic, the Dockerfile now needs only one line, which copies the built Artifact into the Docker image:

```dockerfile
COPY microservice-app/target/micro-service.jar /srv/app/micro-service.jar
```

After a successful build, you can trigger the deployment and verify it:

```groovy
stage('Deploy to development') {
    steps {
        openshiftDeploy(
            namespace: '$DEV',
            depCfg: '$DEPLOYMENT_CONFIG',
            waitTime: '300000'
        )
        openshiftVerifyDeployment(
            namespace: '$DEV',
            depCfg: '$DEPLOYMENT_CONFIG',
            replicaCount: '1',
            verifyReplicaCount: 'true',
            waitTime: '300000'
        )
    }
}
```

You should see logs like these in Jenkins:

```
[rollout:latest:deploymentconfig/micro-service] deploymentconfig.apps.openshift.io/micro-service rolled out
[rollout:status:deploymentconfig/micro-service] Waiting for rollout to finish: 0 out of 1 new replicas have been updated...
[rollout:status:deploymentconfig/micro-service] Waiting for rollout to finish: 0 out of 1 new replicas have been updated...
[rollout:status:deploymentconfig/micro-service] Waiting for rollout to finish: 1 old replicas are pending termination...
[rollout:status:deploymentconfig/micro-service] Waiting for rollout to finish: 1 old replicas are pending termination...
[rollout:status:deploymentconfig/micro-service] Waiting for latest deployment config spec to be observed by the controller loop...
[rollout:status:deploymentconfig/micro-service] replication controller "micro-service-12" successfully rolled out
```
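The deploy stage uses `openshiftDeploy` and `openshiftVerifyDeployment` from the OpenShift Pipeline plugin. If you would rather call `oc` from an `sh` step, as in the build stage, a rough sketch is to start a new rollout with `oc rollout latest` and wait for it with `oc rollout status`. The function name, the `OC=echo` dry-run override and the 300-second limit (our reading of `waitTime: '300000'` as milliseconds) are assumptions; unlike `openshiftVerifyDeployment`, this sketch does not check the replica count explicitly.

```shell
#!/usr/bin/env bash
# Sketch: plain-oc variant of the deploy-and-verify stage.
# Assumptions: `oc` is logged in, GNU coreutils `timeout` is installed,
# and OC=echo may be set for a dry run. The function name is hypothetical.

deploy_and_wait() {
  local dc_name="$1" namespace="$2" timeout_seconds="${3:-300}"
  # Trigger a new deployment of the DeploymentConfig.
  "${OC:-oc}" rollout latest "dc/${dc_name}" -n "${namespace}" || return 1
  # Watch the rollout; give up after timeout_seconds.
  timeout "${timeout_seconds}" "${OC:-oc}" rollout status "dc/${dc_name}" -n "${namespace}"
}

# Example (inside an sh step): deploy_and_wait "$DEPLOYMENT_CONFIG" "$DEV"
```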

Summary

Using the built Artifact directly is a much cleaner approach and ensures a tight coupling between the Artifact build and the Docker image build in Jenkins. Everything is now controlled in the Jenkinsfile, so you can adjust the level of automation and the depth of deployment yourself.