Persistent Storage with Red Hat OpenShift on VMware

This article is a technical summary of my experience with the Red Hat OpenShift Container Platform (OCP). Starting with this article, I also publish some stats and thoughts about the creative writing process. I got involved in a sophisticated storage problem with OpenShift: under the hood, Kubernetes tries to allocate persistent storage from the VMware infrastructure. Understanding and troubleshooting the problem was a challenge.

Stats

In the stats section, you will find information about the creative writing process and the problem domain.

- Estimated reading time: 21 minutes, 45 seconds

- 3261 words

- 783 lines

Wisdom:

What I cannot build, I do not understand. (Richard Feynman)

Stats for Geeks

Stats for Geeks is a fun section to illustrate that writing can be challenging but also fun if you are into technology and geek culture.

- OCP is also an abbreviation for Omni Consumer Products, the megacorporation from the movie RoboCop.

- OCP is an anagram for POC (proof of concept).

- I started writing this article on International Women's Day. In our company, the day is nothing special: we don't distinguish between female and male engineers.

Beverages

- Coffee: 5

- Tea: 2

- Beer: 1

DevOps songs:

- What Kind Of Man (The Odyssey – Chapter 1) by Florence + The Machine

- Third Eye (The Odyssey – Chapter 9) by Florence + The Machine

- No, No, No by Destiny's Child

Entertainment during writing:

- Hearthstone Battles: 1

- Super Mario Kart: 1

Time spent

- Time at work: 24 hours

- Invested Research Time: 6 hours (unpaid)

- Time writing: 10 hours (unpaid)

Technical Stats

The Server

- CPU capacity: 514.75 GHz

- Memory: 2 TB

- Disk: 23.4 TB

[root@master1 ~]# govc about

Name: VMware vCenter Server

Vendor: VMware, Inc.

Version: 6.5.0

Build: 8024368

OS type: linux-x64

API type: VirtualCenter

API version: 6.5

Product ID: vpx

UUID: 5b5960d9-eddf-41be-99fc-61577c06cedb

Linux, Ansible and Docker

[root@master1 ~]# cat /etc/redhat-release

Red Hat Enterprise Linux Server release 7.5 (Maipo)

[root@master1 ~]# ansible --version

ansible 2.6.6

[root@master1 ~]# docker -v

Docker version 1.13.1, build 8633870/1.13.1

OpenShift, Kubernetes

[root@master1 ~]# oc version

oc v3.11.16

kubernetes v1.11.0+d4cacc0

features: Basic-Auth GSSAPI Kerberos SPNEGO

Server https://master1:8443

openshift v3.11.16

kubernetes v1.11.0+d4cacc0

Products and Technology

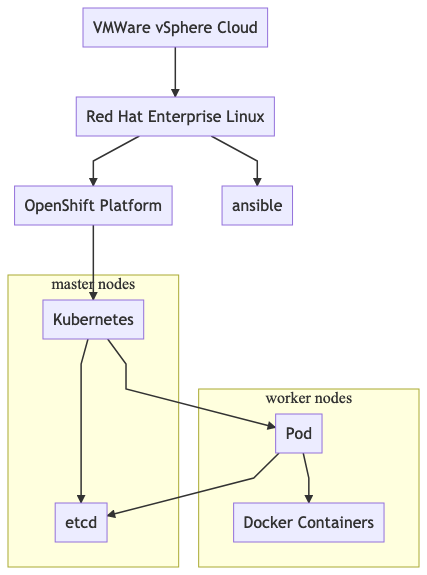

In a customer project, I am supporting the infrastructure team. A dedicated production server farm uses VMware to provide services for its customers. The OpenShift Container Platform runs on virtual machines and deploys Docker containers with Kubernetes. The production platform is highly available: it has three master nodes and multiple application nodes.

For our non-technical readers, here is a glossary of the beauty of distributed systems in a world full of containerised software.

TL;DR (too long; didn't read)

Bottom-up explanation:

- Docker is the software to run your desired software in containers

- Kubernetes orchestrates your containers across multiple nodes and ensures high availability with pods

- The cluster state is persisted in etcd

- OpenShift uses Kubernetes and Docker

- The OpenShift Platform is running on virtual machines, provided by the VMware vSphere solution

Docker Terminology

- Docker = computer program that performs operating-system-level virtualisation to run software packages in containers

- container = A Docker container image is a lightweight, standalone, executable package of software that includes everything needed to run an application: code, runtime, system tools, system libraries and settings.

Kubernetes Terminology

- Kubernetes (k8s) = open-source container orchestration system, originally developed by Google

- kubectl = command line tool for Kubernetes

- etcd = etcd is a distributed key-value store that provides a reliable way to store data across a cluster of machines.

- pod = A pod (as in a pod of whales or pea pod) is a group of one or more containers (such as Docker containers), with shared storage/network, and a specification for how to run the containers.

OpenShift Terminology

- Red Hat OpenShift Container Platform (OCP) = a production platform for launching Docker containers.

- oc = OpenShift CLI

VMware vSphere Terminology

- VMware vSphere (vsphere) = a cloud computing virtualization platform.

- Virtual Machine = an emulation of a computer system

- govc = vSphere command line interface written in Go

- vmdk = virtual machine disk volume

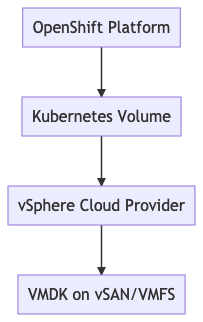

Storage Architecture

Find below a brief overview of the storage architecture in the OpenShift Container Platform.

About Storage

Containers are stateless and ephemeral, but applications are stateful and need persistent storage. Data infrastructure software like PostgreSQL, Elasticsearch or Apache Kafka must store its data on persistent storage volumes; otherwise, the stored data is lost after every restart, relocation or redistribution. Kubernetes manages storage in OpenShift. You can either invoke the OpenShift command line (oc) or use the Kubernetes command line (kubectl) to manage the underlying storage infrastructure.

About Storage High Availability

High availability of storage in the infrastructure is the responsibility of the underlying storage provider. The VMware vSphere platform provides several solutions for that. VMware adds persistent storage support to Kubernetes through a plugin called vSphere Cloud Provider. Kubernetes provides many possibilities; below is an overview for VMware vSphere.

© VMware vSphere Provider


Storage Objects

We are dealing with the following terms:

- volume = a logical volume

- storage class = describes the class or quality of storage

- pv = persistent volume

- pvc = persistent volume claim

- vmdk = virtual disk of VMware

Volume

- A Kubernetes volume is different from a Docker volume!

- In Docker, a volume is merely a directory on disk or in another container.

- The Kubernetes volume is an additional abstraction that outlives the containers in a running pod.

- Kubernetes has a vast selection of volume types.

- The volume type of interest here is vsphereVolume.

Storage Class

A StorageClass provides a way for administrators to describe the «classes» of storage they offer. Different classes might map to quality-of-service levels, or backup policies, or arbitrary policies determined by the cluster administrators. Kubernetes itself is unopinionated about what classes represent. This concept is sometimes called «profiles» in other storage systems.

Persistent Volume (PV)

In short:

- admins (cluster administrators) use PVs to provide persistent storage for a cluster

- shared across the cluster (project unspecific)

- a PV can only be bound to one PVC

- PVs are resources in the cluster

Persistent Volume Claim (PVC)

In short:

- developer (OpenShift user) can claim space from a persistent volume

- specific to a project

- PVCs are requests for PVs and also act as claim checks to the resources

- claiming more storage than the PV provides results in failure

VMDK

Since we are dealing with virtual disks, note that VMware provides several disk types:

- zeroedthick (default) – Space required for the virtual disk is allocated during creation.

- eagerzeroedthick – Space required for the virtual disk is allocated at creation time. In contrast to zeroedthick format, the data remaining on the physical device is zeroed out during creation. It takes the longest time of all disk types.

- thick – Space required for the virtual disk is allocated during creation.

- thin – Space required for the virtual disk is not allocated during creation, but is supplied, zeroed out, on demand at a later time.

Configure VMware vSphere for OpenShift

Ensure that your vcenter-user has valid credentials and all necessary permissions! Otherwise, you get error messages like this one:

[root@master1 ~]# oc describe pvc

Name: pvc0001

...

Warning ProvisioningFailed persistentvolume-controller

Failed to provision volume with StorageClass "vsphere-standard":

ServerFaultCode: Cannot complete login due to an incorrect user name or password.

We use govc to manage our virtual machines.

govc

To set up govc, I followed these installation steps:

curl -LO https://github.com/vmware/govmomi/releases/download/v0.20.0/govc_linux_amd64.gz

gunzip govc_linux_amd64.gz

chmod +x govc_linux_amd64

cp govc_linux_amd64 /usr/bin/govc

For convenience in later operations, we set some default values as environment variables. You can override them by passing the corresponding options on the command line.

# your REST API vSphere server

export GOVC_URL='vsphere.mydomain.ch'

# user with extended privileges

export GOVC_USERNAME='vcenter-user'

export GOVC_PASSWORD='vcenter-password'

# default data-center

export GOVC_DATACENTER='dc3'

# default data-store

export GOVC_DATASTORE='ESX_OCP'

# self-signed certificate

export GOVC_INSECURE=1
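Because the govc defaults live in environment variables, a missing one only shows up later as a confusing error. A minimal bash sketch, assuming the variable names exported above, checks them up front; the helper name check_govc_env is hypothetical:

```shell
#!/usr/bin/env bash
# Hypothetical helper: verify that the govc defaults above are set before calling govc.
# GOVC_INSECURE is optional and therefore not checked.
check_govc_env() {
  local var missing=0
  for var in GOVC_URL GOVC_USERNAME GOVC_PASSWORD GOVC_DATACENTER GOVC_DATASTORE; do
    # ${!var} is bash indirect expansion: the value of the variable named in $var.
    if [ -z "${!var:-}" ]; then
      echo "missing: $var" >&2
      missing=1
    fi
  done
  return "$missing"
}
```

For example, `check_govc_env && govc about` only contacts vCenter when all defaults are present.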

VMware Tools

Enabling VMware vSphere requires installing the VMware Tools on each node VM. Check the installation:

[root@master1 ~]# yum search "VMware"

open-vm-tools.x86_64 : Open Virtual Machine Tools for virtual machines hosted on VMware

Check that the package is installed:

[root@master1 ~]# yum list installed open-vm-tools

Installed Packages

open-vm-tools.x86_64 10.1.10-3.el7_5.1 @rhel-7-server-rpms

There is a minor difference between the commercial VMware Tools and the Open VM Tools. For server usage, open-vm-tools is sufficient.

Enable UUID

Set the disk.EnableUUID parameter to true for each Node VM. This setting ensures that the VMware vSphere’s Virtual Machine Disk (VMDK) always presents a consistent UUID to the VM, allowing the disk to be mounted properly.

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Master Nodes/master1"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Master Nodes/master2"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Master Nodes/master3"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Application Nodes/worker1"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Application Nodes/worker2"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Application Nodes/worker3"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Application Nodes/worker4"

govc vm.change -e="disk.enableUUID=true" -vm="/dc3/vm/OpenShift/Application Nodes/worker5"
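The eight commands above differ only in the VM path. As a bash sketch, assuming the folder layout and node names of this cluster, a loop applies the setting to every node. The function names and the DRY_RUN switch are hypothetical additions that let you review the commands before executing them:

```shell
#!/usr/bin/env bash
# Sketch: apply disk.enableUUID=true to all node VMs in one loop.
# Folder and node names are the ones from this article; adapt them to your inventory.
# With DRY_RUN=1 the govc commands are only printed, not executed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "$*"
  else
    "$@"
  fi
}

enable_uuid_all() {
  local base="/dc3/vm/OpenShift" vm
  for vm in "Master Nodes/master1" "Master Nodes/master2" "Master Nodes/master3" \
            "Application Nodes/worker1" "Application Nodes/worker2" \
            "Application Nodes/worker3" "Application Nodes/worker4" \
            "Application Nodes/worker5"; do
    # Quote the path: the folder names contain spaces.
    run govc vm.change -e="disk.enableUUID=true" -vm="$base/$vm"
  done
}
```

Run `DRY_RUN=1 enable_uuid_all` first and compare the printed paths with your inventory; then call `enable_uuid_all` without DRY_RUN.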

To reboot the cluster, reboot each node in turn. This example reboots master node 3:

[root@master1 ~]# govc vm.power -r=true master3

Reboot guest VirtualMachine:vm-5182... OK

Check that the UUID is correct; there is a known UUID bug.

[root@master1 ~]# govc vm.info master1

Name: master1

Path: /dc3/vm/OpenShift/Master Nodes/master1

UUID: 422bef48-a838-7d35-6ddd-883ec1501bad

Guest name: Red Hat Enterprise Linux 7 (64-bit)

Memory: 16384MB

CPU: 4 vCPU(s)

Power state: poweredOn

Boot time: 2019-03-09 10:22:35.770195 +0000 UTC

IP address: 10.128.0.1

Host: s42

Verify the UUID in Kubernetes and in the VMware guest:

[root@master1 /]# kubectl describe node master1 | grep "System UUID"

System UUID: 422BEF48-A838-7D35-6DDD-883EC1501BAD

[root@master1 /]# cat /sys/class/dmi/id/product_serial

VMware-42 2b ef 48 a8 38 7d 35-6d dd 88 3e c1 50 1b ad

[root@master1 /]# cat /sys/class/dmi/id/product_uuid

422BEF48-A838-7D35-6DDD-883EC1501BAD
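All three values describe the same UUID: govc prints it in lower case, Kubernetes and product_uuid in upper case, and product_serial as space-separated hex pairs. A small bash sketch, assuming the serial layout shown above, converts the serial so that the comparison can be scripted; serial_to_uuid is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: convert the VMware product_serial into the upper-case UUID format
# that Kubernetes reports as "System UUID". Assumes the "VMware-xx xx ...-xx ..."
# layout shown above, i.e. 32 hex digits after the "VMware-" prefix.
serial_to_uuid() {
  local hex
  # Drop the prefix, delete spaces and dashes, upper-case the hex digits.
  hex=$(printf '%s' "${1#VMware-}" | tr -d ' -' | LC_ALL=C tr 'a-f' 'A-F')
  # Reject anything that is not exactly 32 hex digits.
  if ! [[ $hex =~ ^[0-9A-F]{32}$ ]]; then
    echo "unexpected serial format: $1" >&2
    return 1
  fi
  # Re-insert the dashes in the 8-4-4-4-12 layout.
  printf '%s-%s-%s-%s-%s\n' "${hex:0:8}" "${hex:8:4}" "${hex:12:4}" "${hex:16:4}" "${hex:20:12}"
}
```

On a node (as root), compare `serial_to_uuid "$(cat /sys/class/dmi/id/product_serial)"` with the content of /sys/class/dmi/id/product_uuid. For master1, both yield 422BEF48-A838-7D35-6DDD-883EC1501BAD; a mismatch hints at a UUID problem.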

Configuration

Installing with Ansible also creates and configures the following files for your OpenShift vSphere environment:

- /etc/origin/cloudprovider/vsphere.conf

- /etc/origin/master/master-config.yaml

- /etc/origin/node/node-config.yaml

Verify that these files are identical on all nodes!
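Comparing the files by eye across eight nodes is error-prone. A bash sketch, assuming password-less SSH as root from master1 to every node (an assumption about your setup), compares checksums instead; collect_checksums and all_identical are hypothetical helpers:

```shell
#!/usr/bin/env bash
# Sketch: compare one configuration file across nodes by its SHA-256 checksum.

# Prints "<checksum>  <node>" per node; MISSING if the file could not be read.
collect_checksums() {
  local file=$1 node sum
  shift
  for node in "$@"; do
    sum=$(ssh "root@$node" sha256sum "$file" 2>/dev/null | cut -d' ' -f1)
    printf '%s  %s\n' "${sum:-MISSING}" "$node"
  done
}

# Reads "<checksum>  <node>" lines on stdin; succeeds only if all checksums are equal.
all_identical() {
  [ "$(cut -d' ' -f1 | sort -u | grep -c .)" -eq 1 ]
}
```

Usage: `collect_checksums /etc/origin/cloudprovider/vsphere.conf master1 master2 master3 worker1 worker2 worker3 worker4 worker5 | all_identical && echo identical`. A node whose file cannot be read counts as a difference.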

Provisioning Methods

To understand my difficulty, you need some basics first. There are two methods of provisioning vSphere storage:

- Static Provisioning

- Dynamic Provisioning

Static Provisioning

This approach worked with minor adjustments to the given OpenShift examples. The process involves both the cluster administrator and the OpenShift developer.

Dynamic Provisioning

The vSphere Cloud Provider plugin for Kubernetes can perform steps 1 and 2 of static provisioning (creating the virtual disk and the persistent volume) and thus provision storage dynamically from PVCs alone. To accomplish that, we have to define a StorageClass. The administrator is not involved in this procedure.

This approach has given me headaches since there were multiple pitfalls and obstacles to overcome.

Static Provisioning

These steps are the standard procedure.

- Create a virtual disk.

- Create a persistent volume PV for that disk.

- Create a persistent volume claim PVC for the PV.

- Let a pod use the PVC.

Create a virtual disk

First, we need a virtual disk on the vSphere datastore. Create a virtual disk with 10 GB of disk space, and ensure that the volumes directory exists on the datastore. As the vmdk name, I chose jesus.vmdk, because it is always funny when the cluster admin tells me that he has found Jesus.

govc datastore.disk.create -size 10G volumes/jesus.vmdk

The disk name must comply with the following regex:

`[a-z](([-0-9a-z]+)?[0-9a-z])?(\.[a-z0-9](([-0-9a-z]+)?[0-9a-z])?)*`.
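Before creating a disk, the name can be checked against this regex. The following bash sketch assumes that the regex applies to the file name without the directory (volumes/ contains a slash, which the regex does not allow). It validates the name, creates the directory with govc datastore.mkdir -p and then creates the disk. The helper names and the DRY_RUN switch are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: validate a vmdk name, then create its directory and the disk with govc.
# With DRY_RUN=1 the govc commands are only printed, not executed.

DISK_NAME_RE='^[a-z](([-0-9a-z]+)?[0-9a-z])?(\.[a-z0-9](([-0-9a-z]+)?[0-9a-z])?)*$'

valid_disk_name() {
  # LC_ALL=C keeps [a-z] from matching upper-case letters in some locales.
  printf '%s\n' "$1" | LC_ALL=C grep -Eq "$DISK_NAME_RE"
}

run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Arguments: directory on the default datastore, file name, size (e.g. 10G).
create_disk() {
  local dir=$1 name=$2 size=$3
  if ! valid_disk_name "$name"; then
    echo "invalid disk name: $name" >&2
    return 1
  fi
  run govc datastore.mkdir -p "$dir"
  run govc datastore.disk.create -size "$size" "$dir/$name"
}
```

Usage: `create_disk volumes jesus.vmdk 10G` creates the same disk as the command above, while a name like Jesus.vmdk is rejected before govc is called.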

Check the new virtual disk.

[root@master1 ~]# govc datastore.disk.info volumes/jesus.vmdk

Name: volumes/jesus.vmdk

Type: thin

Parent:

Create a Persistent Volume

We map the new vmdk to a new persistent volume.

Create the file vsphere-volume-pv.yaml with the following content:

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv0001
spec:
  capacity:
    storage: 10Gi
  accessModes:
    - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  vsphereVolume:
    volumePath: "[ESX_OCP] volumes/jesus.vmdk"
    fsType: ext4
  storageClassName: "vsphere-standard"

Note that storageClassName is mandatory in our setup to make it work. We use the OpenShift CLI to create the persistent volume; OpenShift invokes Kubernetes internally.

[root@master1 ~]# oc create -f vsphere-volume-pv.yaml

persistentvolume/pv0001 created

Inspect the persistent volume:

[root@master1 ~]# oc describe pv

Name: pv0001

Labels: <none>

Annotations: <none>

Finalizers: [kubernetes.io/pv-protection]

StorageClass: vsphere-standard

Status: Available

Claim:

Reclaim Policy: Retain

Access Modes: RWO

Capacity: 10Gi

Node Affinity: <none>

Message:

Source:

Type: vSphereVolume (a Persistent Disk resource in vSphere)

VolumePath: [ESX_OCP] volumes/jesus.vmdk

FSType: ext4

StoragePolicyName:

Events: <none>

Create a Persistent Volume Claim

Create the file vsphere-volume-pvc.yaml with the following content:

apiVersion: "v1"
kind: "PersistentVolumeClaim"
metadata:
  name: "vinh"
spec:
  accessModes:
    - "ReadWriteOnce"
  resources:
    requests:
      storage: "1Gi"
  storageClassName: "vsphere-standard"
  volumeName: "pv0001"

The claim is named vinh, and we bind it to the persistent volume pv0001 via volumeName. Note that we claim only 1 GiB of the 10 GiB. That makes little sense, but it works: the claim is bound to the whole volume, so it later reports a capacity of 10Gi. Requesting more storage than the persistent volume provides results in failure.
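The capacity rule can be checked before a claim is created. The following bash sketch deliberately understands only integer quantities with the suffixes Mi, Gi and Ti, a simplification, since Kubernetes accepts many more formats; to_mib and request_fits are hypothetical names:

```shell
#!/usr/bin/env bash
# Sketch: does a PVC storage request fit into a PV capacity?
# Only integer quantities with the suffixes Mi, Gi and Ti are supported.

# Converts e.g. "2Gi" to 2048 (MiB); fails on unsupported input.
to_mib() {
  local q=$1 num=${1%??} unit=${1: -2}
  case $num in
    ''|*[!0-9]*) echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
  case $unit in
    Mi) echo $(( 10#$num )) ;;
    Gi) echo $(( 10#$num * 1024 )) ;;
    Ti) echo $(( 10#$num * 1024 * 1024 )) ;;
    *)  echo "unsupported quantity: $q" >&2; return 1 ;;
  esac
}

# Arguments: requested quantity, available capacity.
request_fits() {
  local req cap
  req=$(to_mib "$1") || return 1
  cap=$(to_mib "$2") || return 1
  [ "$req" -le "$cap" ]
}
```

For the claim above, `request_fits 1Gi 10Gi` succeeds, while `request_fits 20Gi 10Gi` fails, just as such a claim would.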

Create the claim:

[root@master1 ~]# oc create -f vsphere-volume-pvc.yaml

persistentvolumeclaim/vinh created

Check the claim (status pending):

[root@master1 ~]# oc describe pvc

Name: vinh

Namespace: default

StorageClass: vsphere-standard

Status: Pending

Volume: pv0001

Labels: <none>

Annotations: <none>

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 0

Access Modes:

Events: <none>

Check again (status bound, now usable):

[root@master1 ~]# oc describe pvc

Name: vinh

Namespace: default

StorageClass: vsphere-standard

Status: Bound

Volume: pv0001

Labels: <none>

Annotations: pv.kubernetes.io/bind-completed=yes

Finalizers: [kubernetes.io/pvc-protection]

Capacity: 10Gi

Access Modes: RWO

Events: <none>
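Instead of re-running oc describe until the status changes, the phase can be polled. A bash sketch using oc get with a jsonpath expression; wait_for_bound and the OC override (for example to use kubectl instead) are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: poll a claim until its phase is Bound.
# Arguments: claim name, timeout in seconds (default 60). OC defaults to oc.
wait_for_bound() {
  local claim=$1 timeout=${2:-60} phase="" waited=0
  while [ "$waited" -lt "$timeout" ]; do
    # .status.phase is Pending, Bound or Lost for a PVC.
    phase=$("${OC:-oc}" get pvc "$claim" -o jsonpath='{.status.phase}' 2>/dev/null) || phase=""
    if [ "$phase" = "Bound" ]; then
      echo "$claim is Bound"
      return 0
    fi
    sleep 2
    waited=$((waited + 2))
  done
  echo "$claim still ${phase:-unknown} after ${timeout}s" >&2
  return 1
}
```

Usage: `wait_for_bound vinh 120` returns as soon as the claim is bound, or fails after two minutes.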

We created a PV and claimed it with the PVC:

[root@master1 ~]# oc get pv

NAME     CAPACITY   ACCESS MODES   RECLAIM POLICY   STATUS   CLAIM          STORAGECLASS       REASON   AGE
pv0001   10Gi       RWO            Retain           Bound    default/vinh   vsphere-standard            19m

[root@master1 ~]# oc get pvc

NAME   STATUS   VOLUME   CAPACITY   ACCESS MODES   STORAGECLASS       AGE
vinh   Bound    pv0001   10Gi       RWO            vsphere-standard   23s

Alternatively, output the information in YAML:

[root@master1 ~]# oc get pvc -o yaml

The response:

apiVersion: v1
items:
- apiVersion: v1
  kind: PersistentVolumeClaim
  metadata:
    annotations:
      pv.kubernetes.io/bind-completed: "yes"
    creationTimestamp: 2019-03-09T15:23:13Z
    finalizers:
    - kubernetes.io/pvc-protection
    name: vinh
    namespace: default
    resourceVersion: "23390760"
    selfLink: /api/v1/namespaces/default/persistentvolumeclaims/vinh
    uid: 4101be02-427f-11e9-8b17-005056ab11cb
  spec:
    accessModes:
    - ReadWriteOnce
    resources:
      requests:
        storage: 1Gi
    storageClassName: vsphere-standard
    volumeName: pv0001
  status:
    accessModes:
    - ReadWriteOnce
    capacity:
      storage: 10Gi
    phase: Bound
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""

Dynamic Provisioning

The OpenShift Container Platform persistent volume framework enables dynamic provisioning and allows administrators to provision a cluster with persistent storage. The framework also gives users a way to request those resources without having any knowledge of the underlying infrastructure.

StorageClass Definition

To perform dynamic provisioning, we need a default StorageClass, because the claim below does not name a storage class. The default is marked with the annotation storageclass.kubernetes.io/is-default-class: "true". Check for a similar StorageClass definition:

[root@master1 ~]# oc get sc -o yaml

apiVersion: v1
items:
- apiVersion: storage.k8s.io/v1
  kind: StorageClass
  metadata:
    annotations:
      storageclass.kubernetes.io/is-default-class: "true"
    creationTimestamp: 2019-03-09T17:13:20Z
    name: vsphere-standard
    namespace: ""
    resourceVersion: "23402534"
    selfLink: /apis/storage.k8s.io/v1/storageclasses/vsphere-standard
    uid: a369d9f1-428e-11e9-8b05-005056ab11cb
  parameters:
    datastore: ESX_OCP
    diskformat: thin
  provisioner: kubernetes.io/vsphere-volume
  reclaimPolicy: Delete
  volumeBindingMode: Immediate
kind: List
metadata:
  resourceVersion: ""
  selfLink: ""
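If no such StorageClass exists in your cluster, it can be created from a manifest with the same name, annotation, provisioner and parameters as the output above. A bash sketch, assuming these values fit your datastore; create_default_sc and the OC override are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: create the default vSphere StorageClass with the values shown above.
# OC defaults to oc; the manifest is passed on stdin ("-f -").
create_default_sc() {
  "${OC:-oc}" create -f - <<'EOF'
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: vsphere-standard
  annotations:
    storageclass.kubernetes.io/is-default-class: "true"
provisioner: kubernetes.io/vsphere-volume
parameters:
  datastore: ESX_OCP
  diskformat: thin
reclaimPolicy: Delete
EOF
}
```

Usage: `oc get sc vsphere-standard >/dev/null 2>&1 || create_default_sc` only creates the class if it is missing.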

Perform a Persistent Volume Claim

Create a persistent volume claim in vsphere-volume-pvc.yaml:

kind: PersistentVolumeClaim
apiVersion: v1
metadata:
  name: pvc0001
spec:
  accessModes:
    - ReadWriteOnce
  resources:
    requests:
      storage: 2Gi

After creating the claim with oc create -f vsphere-volume-pvc.yaml, oc describe shows our problem:

[root@master ~]# oc describe pvc

Name: pvc0001

Namespace: default

StorageClass: vsphere-standard

Status: Pending

Volume:

Labels: <none>

Annotations: volume.beta.kubernetes.io/storage-provisioner=kubernetes.io/vsphere-volume

Finalizers: [kubernetes.io/pvc-protection]

Capacity:

Access Modes:

Events:
  Type     Reason              Age               From                         Message
  ----     ------              ----              ----                         -------
  Warning  ProvisioningFailed  5s (x11 over 2m)  persistentvolume-controller  Failed to provision volume with StorageClass "vsphere-standard": No VM found

There are two significant open bugs related to dynamic provisioning:

- https://github.com/kubernetes/kubernetes/issues/65933

- https://github.com/kubernetes/kubernetes/issues/71502

Troubleshooting

For troubleshooting, we use jq, a command-line JSON processor, to extract only the relevant information. Install it as follows:

curl -LO https://github.com/stedolan/jq/releases/download/jq-1.6/jq-linux64

chmod +x jq-linux64

cp jq-linux64 /usr/bin/jq

Check Nodes

The following oc command is equivalent to the kubectl command used below:

oc get nodes

We check all existing nodes in the OpenShift cluster and their respective roles with kubectl:

[root@master1 ~]# kubectl get nodes

NAME      STATUS   ROLES          AGE    VERSION
worker1   Ready    compute        116d   v1.11.0+d4cacc0
worker2   Ready    compute        116d   v1.11.0+d4cacc0
worker3   Ready    compute        116d   v1.11.0+d4cacc0
master1   Ready    infra,master   116d   v1.11.0+d4cacc0
master2   Ready    infra,master   116d   v1.11.0+d4cacc0
worker4   Ready    compute        116d   v1.11.0+d4cacc0
worker5   Ready    compute        116d   v1.11.0+d4cacc0
master3   Ready    infra,master   116d   v1.11.0+d4cacc0

Check Provider-Id

We check whether the provider ID of each node contains a UUID equal to its system UUID:

[root@master1 ~]# kubectl get nodes -o json | jq '.items[]|[.metadata.name, .spec.providerID, .status.nodeInfo.systemUUID]'

[

"worker1",

null,

"422B372C-F162-0591-B032-69FFB9165D5F"

]

[

"worker2",

null,

"422B5C03-90EA-D222-90AD-81112FA21073"

]

[

"worker3",

null,

"422B2637-AB67-BF90-937A-3CF30824EC8D"

]

[

"master1",

null,

"422BEF48-A838-7D35-6DDD-883EC1501BAD"

]

[

"master2",

null,

"422B256E-E5D5-1877-98C3-4B339941006A"

]

[

"worker4",

null,

"422B13EA-23D7-CF07-5C7A-70E132A25684"

]

[

"worker5",

null,

"422BF424-FF37-FC35-507B-4A6AFF733F56"

]

[

"master3",

null,

"422B2955-3E6F-0A61-75C5-31A0E5B51135"

]

Patch Provider Id

As the output is null for the provider id, we are going to patch it manually.

Check the current node and memorise the system UUID.

kubectl describe node $(hostname)

Patch the node with the memorised UUID.

# on master1

kubectl patch node $(hostname) -p '{"spec":{"providerID":"vsphere://422BEF48-A838-7D35-6DDD-883EC1501BAD"}}'

Repeat that for every node. After the patch, the dynamic provisioning still didn't work.
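Repeating the patch by hand for every node is tedious. The following bash sketch reads each node's name, providerID and system UUID through a kubectl jsonpath template and patches only nodes without a providerID, since Kubernetes rejects changing a providerID that is already set. It reproduces the manual step only; as noted above, the patch alone did not fix our problem. patch_all_nodes, provider_patch, KUBECTL and DRY_RUN are hypothetical names:

```shell
#!/usr/bin/env bash
# Sketch: set spec.providerID to "vsphere://<system UUID>" on every node that has none.
# KUBECTL defaults to kubectl; with DRY_RUN=1 the patch commands are only printed.

run() {
  if [ -n "${DRY_RUN:-}" ]; then printf '%s\n' "$*"; else "$@"; fi
}

# Builds the patch document for one system UUID.
provider_patch() {
  printf '{"spec":{"providerID":"vsphere://%s"}}' "$1"
}

patch_all_nodes() {
  local kc=${KUBECTL:-kubectl} name pid uuid
  # One line per node: name|providerID|systemUUID (providerID is empty when unset).
  # "|" is not a whitespace character, so an empty field is preserved by read.
  "$kc" get nodes -o jsonpath='{range .items[*]}{.metadata.name}{"|"}{.spec.providerID}{"|"}{.status.nodeInfo.systemUUID}{"\n"}{end}' |
  while IFS='|' read -r name pid uuid; do
    if [ -n "$pid" ]; then
      continue
    fi
    run "$kc" patch node "$name" -p "$(provider_patch "$uuid")"
  done
}
```

Run `DRY_RUN=1 patch_all_nodes` first to review the generated patches, then `patch_all_nodes`.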

Check logs

Search /var/log/containers/*.log for messages from datacenter.go, vsphere.go and pv_controller.go. You only see those messages after increasing the log verbosity of the OpenShift platform. Below is a prettified stack of the stderr messages:

{"log":"datacenter.go:78 Unable to find VM by UUID. VM UUID:"}

{"log":"nodemanager.go:414 Error No VM found node info for node master1 not found"}

{"log":"vsphere_util.go:134 Error while obtaining Kubernetes node nodeVmDetail details. error : No VM found"}

{"log":"vsphere.go:1160 Failed to get shared datastore: No VM found"}

{"log":"pv_controller.go:1464 failed to provision volume for claim default/pvc0001 with StorageClass vsphere-standard: No VM found"}

{"log":"event.go Event(v1.ObjectReference{Kind:'PersistentVolumeClaim', Namespace:'default', Name:'pvc0001', UID:'b063844c-3ffe-11e9-8fc9-005056ab11cb', APIVersion:'v1', ResourceVersion:'22900438', FieldPath:''): type: 'Warning' reason: 'ProvisioningFailed' Failed to provision volume with StorageClass 'vsphere-standard',: No VM found"}
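To find these lines without opening every log file, grep can filter for the Go source files named in the messages. A bash sketch; the file names in the pattern are taken from the log excerpt above, and vsphere_log_lines is a hypothetical name:

```shell
#!/usr/bin/env bash
# Sketch: print only the vSphere-related lines from container log files.
vsphere_log_lines() {
  # -h: no file name prefix, -E: extended regex over the Go source file names.
  grep -hE '(datacenter|nodemanager|vsphere|vsphere_util|pv_controller)\.go' "$@"
}
```

Usage: `vsphere_log_lines /var/log/containers/*.log | tail -n 50` shows the most recent matches across all container logs.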

Examine vsphere.conf

We could rule out the UUID bug. Check the cloud provider configuration.

[root@master1 ~]# cat /etc/origin/cloudprovider/vsphere.conf

[Global]

user = "vcenter-user"

password = "vcenter-password"

server = "vsphere.mydomain.ch"

port = 443

insecure-flag = 1

datacenter = dc3

datastore = ESX_OCP

working-dir = "/dc3/vm/OpenShift/"

[Disk]

scsicontrollertype = pvscsi

The working-dir seems suspicious. Check it with govc:

[root@master1 ~]# govc ls /dc3/vm/OpenShift/

/dc3/vm/OpenShift/Master Nodes/

/dc3/vm/OpenShift/Application Nodes/

The problem is that there is no recursive lookup: our node VMs are not located directly in the working-dir but in its sub-folders Master Nodes and Application Nodes. The folder names also contain a space, which seems problematic as well if you don't escape it properly. I followed my hunch and had the VMware administrators move the node VMs directly into the folder configured as working-dir. To do so, we had to shut down the whole OpenShift Container Platform first.
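Before moving VMs, you can check which node VMs are not located directly in the working-dir. A bash sketch that reads a govc ls listing of the working-dir on stdin; missing_vms is a hypothetical helper, and the comparison assumes that govc ls prints full inventory paths, as in the output above:

```shell
#!/usr/bin/env bash
# Sketch: print the node VMs that are NOT directly inside the working-dir.
# stdin: output of `govc ls <working-dir>`; arguments: working-dir, then node names.
missing_vms() {
  local dir=${1%/} listing node rc=0
  shift
  listing=$(cat)
  for node in "$@"; do
    # -x -F: the whole line must equal "<working-dir>/<node>"; VMs in sub-folders do not match.
    if ! printf '%s\n' "$listing" | grep -qxF "$dir/$node"; then
      echo "$node"
      rc=1
    fi
  done
  return "$rc"
}
```

Usage: `govc ls /dc3/vm/OpenShift/ | missing_vms /dc3/vm/OpenShift/ master1 master2 master3 worker1 worker2 worker3 worker4 worker5`. With the original folder layout, all eight names are printed; after the move, the output should be empty.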

Retry claim

After the restart, dynamic provisioning works, and the persistent volume claim is bound:

[root@master1 ~]# oc get pvc

NAME      STATUS   VOLUME                                     CAPACITY   ACCESS MODES   STORAGECLASS       AGE
pvc0001   Bound    pvc-548e75c0-4426-11e9-ac8a-005056ab11cb   2Gi        RWO            vsphere-standard   18h
vinh      Bound    pv0001                                     10Gi       RWO            vsphere-standard   2d

If we take a closer look at the persistent volume that was provisioned for pvc0001:

[root@master1 ~]# oc describe pv/pvc-548e75c0-4426-11e9-ac8a-005056ab11cb

Name: pvc-548e75c0-4426-11e9-ac8a-005056ab11cb

Labels: <none>

Annotations: kubernetes.io/createdby=vsphere-volume-dynamic-provisioner

pv.kubernetes.io/bound-by-controller=yes

pv.kubernetes.io/provisioned-by=kubernetes.io/vsphere-volume

Finalizers: [kubernetes.io/pv-protection]

StorageClass: vsphere-standard

Status: Bound

Claim: default/pvc0001

Reclaim Policy: Delete

Access Modes: RWO

Capacity: 2Gi

Node Affinity: <none>

Message:

Source:

Type: vSphereVolume (a Persistent Disk resource in vSphere)

VolumePath: [ESX_OCP] kubevols/kubernetes-dynamic-pvc-548e75c0-4426-11e9-ac8a-005056ab11cb.vmdk

FSType: ext4

StoragePolicyName:

Events: <none>

The virtual disk kubernetes-dynamic-pvc-548e75c0-4426-11e9-ac8a-005056ab11cb.vmdk was created automatically in the kubevols folder. Dynamic provisioning has some advantages over static provisioning. For one, as mentioned, you don't have to bother with the underlying infrastructure. Depending on your point of view, another advantage is the automatic creation and deletion of the virtual disk and the persistent volume through the persistent volume claim: with the reclaim policy Delete, deleting the claim also deletes the persistent volume and its vmdk. Better ensure that you don't need the data before you delete a persistent volume claim.
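If you want to keep the data of a dynamically provisioned volume, Kubernetes lets you change the reclaim policy of an existing PV to Retain, so that deleting the claim no longer removes the PV and its vmdk. A bash sketch; retain_pv and the OC override are hypothetical:

```shell
#!/usr/bin/env bash
# Sketch: switch an existing PV to the Retain reclaim policy.
# Argument: PV name. OC defaults to oc.
retain_pv() {
  "${OC:-oc}" patch pv "$1" -p '{"spec":{"persistentVolumeReclaimPolicy":"Retain"}}'
}
```

Usage: `retain_pv pvc-548e75c0-4426-11e9-ac8a-005056ab11cb`. Afterwards, a retained PV and its vmdk have to be removed manually, as for the static volume.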

Undo Provisioning

This provisioning walkthrough was an experimental proof of concept. To undo the storage provisioning, use the following commands:

# delete claim first

oc delete pvc vinh

# delete pv

oc delete pv pv0001

# delete vmdk

govc datastore.rm -f volumes/jesus.vmdk

# delete dynamic provision claim

oc delete pvc pvc0001

Summary

The OpenShift Container Platform by Red Hat utilises Kubernetes and Docker. Running OCP on VMware vSphere is fine. Static provisioning of persistent storage works. Dynamic provisioning of storage is a little more challenging, since you have to examine the interaction between Kubernetes and the vSphere Cloud Provider. Overall, it felt like an Odyssey: you have to gather information on three major products and dig through the logging data. In the end, I learned a lot. Troubleshooting and analysing logs are essential; without a central log store such as Elasticsearch, it is a cumbersome task.

Literature

A collection of documentation resources:

- OpenShift Architecture: Storage

- OpenShift Platform: Configuring vSphere

- Kubernetes vSphere Volume

- VMware vSphere Storage for Kubernetes

- VMware vSphere Disk Formats

- OpenShift Platform: Dynamic Provisioning vSphere

- GitHub Kubernetes vSphere Examples

- vSphere Cloud provider and VMDK dynamic provisioning