Process Automation with Flowable in the Broadcasting Industry - Part 1

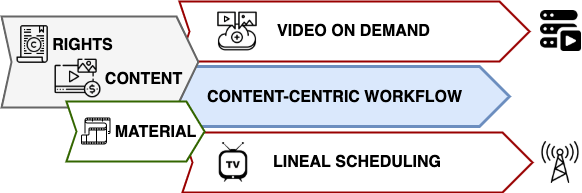

The broadcasting industry is one of the industries that has evolved the most in recent years. In just under 10 years, it has gone from workflows in which physical material was exchanged to fully digital, content-centric workflows. Now, because of platforms such as Netflix and Amazon Prime Video, the industry needs to be more agile in adapting to what its audience demands.

With the help of a workflow manager, television networks and channels, video-on-demand (VOD) services, broadcasters, and telecommunications service providers can manage their workflows, make changes faster, and adjust to what their audience is asking for.

In content-centric workflows, broadcasters decide how and when to make content available to the viewer. The content can be released on several different services and platforms, as well as on linear television or radio channels. Some manage only on-demand services, while others manage only linear channels. Those who have the capacity manage both, to get maximum value from their content.

In this article, we review at a high level the business processes involved in broadcasting services, and then move on to the technical solution, where we show how Flowable can help automate the ingestion of new material.

Figure #1: Broadcasting Workflow

It all begins with the acquisition of audiovisual material, such as movies, series, or live programs, to name a few. Normally the material is acquired after viewing a pilot or demo program sent by the production houses. Once it is acquired, a record is created in the digital content library (Sintec OnAir, for example), and descriptive metadata is added to each record, together with the transmission rights and the medium in which those rights are valid (linear television, catch-up and/or VOD).

The purchase of new material also triggers two important things. The first is that those in charge of building the programming lists for each service or platform can already include the material in their drafts. For example, in linear television, those in charge of programming the daily playlists may already include this material in drafts.

The second action signals the production houses to send the recently purchased material. The material is usually shipped on hard drives, over a transfer protocol (FTP), or through a transfer accelerator (Aspera).

The drafts of the different schedules allow the quality control operators to prioritize the order in which the material will be stored internally (in a MAM for example). Likewise, the versioning of the material is prioritized depending on the region in which it is going to be transmitted.

From here, the workflow changes depending on the service or platform where the material is published. In a following article, we will analyze in detail the workflows to prepare audiovisual material as it is used for linear television or for on-demand services.

The Architecture

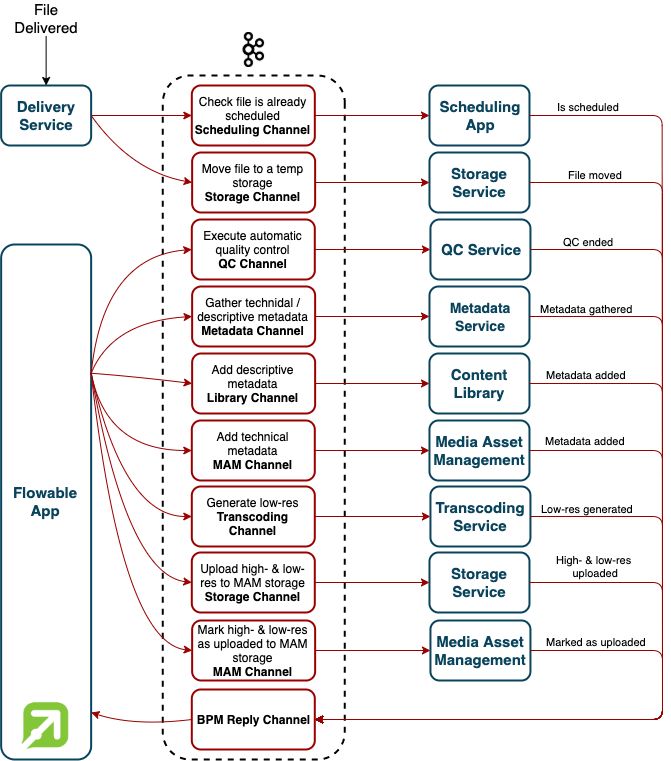

Figure #2: Architecture

The architecture follows the saga design pattern, with Flowable as the orchestrator: it is responsible for telling each participant what to do and when. This gives the flexibility to adapt the solution to the needs of the business at all times, and to define business transactions and rollback mechanisms in case of error.
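To make the orchestration idea concrete, here is a minimal, framework-free sketch of an orchestrated saga. It does not use Flowable, and the names `SagaSketch`, `Step`, and `run` are hypothetical and not taken from the article's source code. Each step pairs an action with a compensation, and when a step fails, the orchestrator undoes the completed steps in reverse order.

```java
import java.util.ArrayDeque;
import java.util.ArrayList;
import java.util.Deque;
import java.util.List;

// Hypothetical illustration of an orchestrated saga; not Flowable API.
class SagaSketch {

    /** One saga step: an action plus the compensation that undoes it. */
    record Step(String name, Runnable action, Runnable compensation) {}

    /** Runs steps in order; on failure, compensates completed steps in reverse order. */
    static boolean run(List<Step> steps, List<String> log) {
        Deque<Step> completed = new ArrayDeque<>();
        for (Step step : steps) {
            try {
                step.action().run();
                log.add("done: " + step.name());
                completed.push(step);
            } catch (RuntimeException e) {
                log.add("failed: " + step.name());
                // Roll back: the most recently completed step is undone first.
                while (!completed.isEmpty()) {
                    Step undo = completed.pop();
                    undo.compensation().run();
                    log.add("compensated: " + undo.name());
                }
                return false;
            }
        }
        return true;
    }

    public static void main(String[] args) {
        List<String> log = new ArrayList<>();
        // Step names are illustrative; the failing upload is simulated.
        List<Step> steps = List.of(
            new Step("move file to temp storage", () -> {}, () -> {}),
            new Step("generate low-res", () -> {}, () -> {}),
            new Step("upload to MAM storage",
                     () -> { throw new IllegalStateException("storage unavailable"); },
                     () -> {}));
        boolean ok = run(steps, log);
        System.out.println(ok + " " + log);
    }
}
```

In a real deployment the process model would describe these steps and their compensations, and the participants would be remote services rather than local `Runnable` instances.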

At the center of the architecture is Kafka, which carries the messages for the different events. It lets us decouple the microservices and adopt the reactive programming paradigm, so the business can react to any event.

Additionally, we have the following microservices:

- Delivery Service: For our example, this microservice detects the delivery of audiovisual material. In practice, it is also in charge of delivering material.

- Scheduling App: Application that a user uses to create the daily playlists of a linear channel.

- QC Service: Service that runs a previously configured automated quality control on the material.

- Metadata Service: Service that collects technical and descriptive information about some material.

- Transcoding Service: Microservice in charge of converting the material from one format to another.

- Storage Service: Service in charge of managing local and cloud storage.

- Content Library: Third-party application where descriptive information about the materials is stored.

- Media Assets Management (MAM): Third-party application where you can manage physical material, view associated technical information, and preview a low-resolution copy of it.

- Flowable App: Spring Boot application with built-in Flowable engine to manage workflows.

Note: The message exchange described below is for the case where the intake of the new material is expected to flow without any problem.

When new material is received by Delivery Service, it sends two messages. The message Move file to a temp storage causes the Storage Service microservice to move the received file to a higher-capacity temporary storage. When finished, Storage Service sends the message File moved.
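The request and reply exchange between Delivery Service and Storage Service can be sketched with a tiny in-memory publish/subscribe bus. This is only a stand-in for Kafka so that the example runs on its own. The class `MessageBusSketch` and the identifier `material-42` are hypothetical, while the message names are the ones used in the text.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.List;
import java.util.Map;
import java.util.function.Consumer;

// Hypothetical in-memory stand-in for a message broker; not the Kafka API.
class MessageBusSketch {
    private final Map<String, List<Consumer<String>>> subscribers = new HashMap<>();
    final List<String> published = new ArrayList<>();

    /** Registers a handler that is called with the material id of each matching message. */
    void subscribe(String messageName, Consumer<String> handler) {
        subscribers.computeIfAbsent(messageName, n -> new ArrayList<>()).add(handler);
    }

    /** Records the message and delivers it synchronously to every subscriber. */
    void publish(String messageName, String materialId) {
        published.add(messageName + ":" + materialId);
        for (Consumer<String> handler : subscribers.getOrDefault(messageName, List.of())) {
            handler.accept(materialId);
        }
    }

    public static void main(String[] args) {
        MessageBusSketch bus = new MessageBusSketch();
        // Storage Service role: reacts to the move request and reports completion.
        bus.subscribe("Move file to a temp storage", id -> bus.publish("File moved", id));
        // Delivery Service role: announces a newly received file.
        bus.publish("Move file to a temp storage", "material-42");
        System.out.println(bus.published);
    }
}
```

The publisher never references the subscriber directly, which is the decoupling that a broker provides between the services.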

The second message sent is Check file is already scheduled, which is picked up by Scheduling App. The Scheduling App checks whether this material has already been added to a daily playlist, regardless of whether the playlist is a draft or final. If it has, it sends an Is scheduled message.

When the messages Check file is already scheduled and Is scheduled are detected, the Flowable App microservice starts the material ingestion workflow.
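The start condition described above (begin the workflow only once both messages have been seen for the same material) can be sketched as a small correlation component. This is an illustrative assumption about how such a trigger might be written in plain Java, not the way the Flowable App implements it. `IngestionStarter` and its methods are hypothetical names.

```java
import java.util.ArrayList;
import java.util.HashMap;
import java.util.HashSet;
import java.util.List;
import java.util.Map;
import java.util.Set;

// Hypothetical correlation sketch; message names come from the text.
class IngestionStarter {
    static final Set<String> REQUIRED =
        Set.of("Check file is already scheduled", "Is scheduled");

    private final Map<String, Set<String>> seenByMaterial = new HashMap<>();
    private final List<String> startedWorkflows = new ArrayList<>();

    /** Records a message; returns true only if this message triggered the workflow start. */
    boolean onMessage(String materialId, String messageName) {
        if (!REQUIRED.contains(messageName)) {
            return false; // unrelated messages are ignored
        }
        Set<String> seen = seenByMaterial.computeIfAbsent(materialId, id -> new HashSet<>());
        seen.add(messageName);
        // Start once per material, and only when every required message has arrived.
        if (seen.containsAll(REQUIRED) && !startedWorkflows.contains(materialId)) {
            startedWorkflows.add(materialId);
            return true;
        }
        return false;
    }

    /** Material ids for which the ingestion workflow has been started, in start order. */
    List<String> startedWorkflows() {
        return List.copyOf(startedWorkflows);
    }
}
```

Keying the state by material id keeps messages for different files from triggering each other's workflows, and the duplicate check means a redelivered message does not start a second instance.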

As a first step, Flowable App sends the Execute automatic quality control and Gather technical / descriptive metadata messages. The first message is picked up by the QC Service microservice, which sends back the message QC ended once the automated quality control is complete. The second message is received by the Metadata Service microservice. When it finishes collecting the technical and descriptive information, it sends the message Metadata gathered.
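Because the two requests are independent, they can run in parallel and the workflow only needs to wait until both replies have arrived. The following sketch shows that fork/join shape with `CompletableFuture`. The class and method names are hypothetical, and the services are simulated locally instead of being reached through messages.

```java
import java.util.concurrent.CompletableFuture;

// Hypothetical fork/join sketch; the real services reply through messages.
class QualityControlStage {

    /** Simulates the QC Service finishing its automated check. */
    static CompletableFuture<String> runAutomaticQc(String materialId) {
        return CompletableFuture.supplyAsync(() -> "QC ended");
    }

    /** Simulates the Metadata Service finishing its collection. */
    static CompletableFuture<String> gatherMetadata(String materialId) {
        return CompletableFuture.supplyAsync(() -> "Metadata gathered");
    }

    /** Starts both tasks, then blocks until both replies are available. */
    static String awaitBoth(String materialId) {
        CompletableFuture<String> qc = runAutomaticQc(materialId);
        CompletableFuture<String> metadata = gatherMetadata(materialId);
        return qc.thenCombine(metadata, (q, m) -> q + " + " + m).join();
    }

    public static void main(String[] args) {
        System.out.println(awaitBoth("material-42"));
    }
}
```

In a process model, the same shape is usually drawn as a parallel split followed by a join before the operator's review task.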

Once the QC operator confirms that the material is OK and has passed quality control, Flowable App sends the Add descriptive metadata and Add technical metadata messages, so that the descriptive information is added to the Content Library and the technical information to the asset manager (Media Assets Management - MAM).

At the same time, Flowable App tells the Transcoding Service microservice to generate a low-resolution copy of the original file using the message Generate low-res. When Flowable App receives the message Low-res generated, the low-res copy has been created and both the high- and low-resolution files should be uploaded to their final storage.

The commands Upload high- and Upload low-res to MAM storage, sent by Flowable App, order the Storage Service microservice to upload the files to their final storage. Depending on the size of the files, this may take a few minutes, so when finished, Storage Service sends the messages High- and low-res uploaded.

Finally, it is important that the Media Assets Management knows the exact location of the files, so that they can be retrieved and sent to the different service providers that need them. This is why the Mark high- and low-res as uploaded to MAM storage messages are sent.

Acquired Material Ingestion

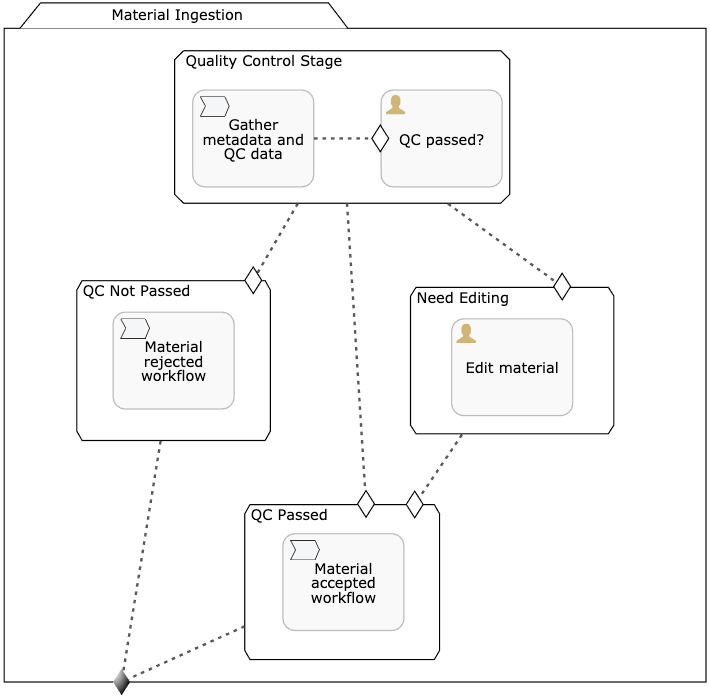

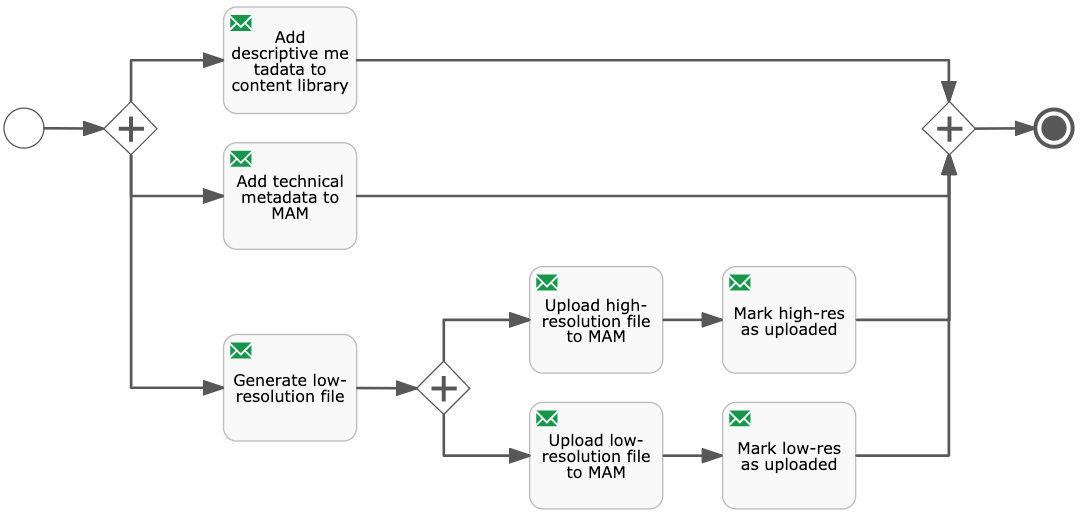

Figure #3: Case: Material Ingestion

The material ingestion workflow is very simple. In the "Quality Control Stage" phase, descriptive and technical information about the material is collected (Metadata Service), and an automated analysis is run to detect errors (QC Service) - see sub-process Gather Material Metadata and Automatic QC. Once it has finished, a QC operator determines whether the material is suitable for transmission, is not suitable, or needs editing.

Figure #4: Process Metadata collection and automatic quality control

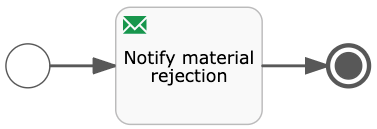

If, for some reason, the material has a defect such as a drop-frame, it goes to the "QC Not Passed" phase - see sub-process Material Ingestion Rejection. For demo purposes, this stage simply sends a notification that the material has been rejected, but it could automatically ask the distribution house to send the material again.

Figure #5: Material Ingestion Rejection

On the other hand, sometimes the material received requires some editing. In these cases, the workflow moves on to the "Need Editing" phase. In this phase, the QC operator edits the original file using an editing tool, producing a new file that is considered to have passed quality control.

Lastly, we move on to the "QC Passed" phase. In this phase, the previously collected information is added to the Content Library and to the _Media Assets Management (MAM)_. At the same time, the Transcoding Service generates a lower-resolution copy of the original file.

Figure #6: Material Accepted Process

Finally, the Storage Service uploads both the high- and low-resolution files to the storage used by the Media Assets Management (MAM), making them available to it.
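The routing that follows the operator's review can be summarized in a few lines. The sketch below maps each decision to the phases the case goes through, using the phase names from the text. The enum `QcDecision` and the method `phasesAfterReview` are hypothetical names chosen for illustration and are not part of the case model.

```java
import java.util.List;

// Hypothetical routing sketch; phase names are taken from the text.
class QcRouting {
    enum QcDecision { REJECTED, NEEDS_EDITING, ACCEPTED }

    /** Returns the phases entered after the QC operator's decision, in order. */
    static List<String> phasesAfterReview(QcDecision decision) {
        return switch (decision) {
            case REJECTED -> List.of("QC Not Passed");
            // An edited file is treated as having passed quality control.
            case NEEDS_EDITING -> List.of("Need Editing", "QC Passed");
            case ACCEPTED -> List.of("QC Passed");
        };
    }

    public static void main(String[] args) {
        for (QcDecision decision : QcDecision.values()) {
            System.out.println(decision + " -> " + phasesAfterReview(decision));
        }
    }
}
```

Keeping the decision as an explicit value makes it straightforward to add another outcome later, for example one that asks the distribution house to resend the material.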

Summary

In this article, taking the ingestion of acquired material as an example, we have shown what a multi-microservice architecture for the broadcasting industry can look like, using Flowable as the process manager and Kafka to send messages between the different services.

Likewise, we have seen how, thanks to the orchestration carried out by Flowable, several tasks can be automated, giving users more time for tasks that cannot be automated and letting them interact with the system only when strictly necessary.

In our next article, we will analyze in detail the workflows for preparing audiovisual material for linear television or for on-demand services. We will also see the role that Flowable can play in the automatic publication of material depending on the service or platform.

The source code can be found on GitHub.