Testing, the little, annoying sister of coding

Is testing like the little sister we have to take everywhere, even though nobody wants her along? What is our experience with software testing? Testing is seen as annoying and time-consuming, and it is often omitted because time runs out at the end of a project. Some may ask the provocative question: why should we test at all? Does the benefit of testing outweigh the cost?

Why Test at All?

As a software product grows more complex, no individual can keep an overview of all its functions, so a structured test process is urgently needed. (We love what we do!) In addition, high quality gives the company a competitive advantage in the market. Most of all, testing builds confidence in the software for both the stakeholder and the developer. In fact, testing saves more costs than it causes.

Who Should Test?

Why is testing so unpopular with developers? Is it because developers and testers pursue fundamentally different goals: developers want to create something, while software testers want to destroy it? Not really! Software testers create something too, namely the quality of the product. Still, it makes sense to separate the two roles of developer and tester, because developers are usually 'blind' to their own mistakes and will often test their components only against the requirements. Software testers are more objective because they are more independent of the code.

Component tests and static tests are created by the developers themselves, because they cover small units and are written close to the code. Developers should also strive to check each other's features whenever possible. Acceptance tests, which check the criteria of the requirements, should be performed by an independent tester. Ideally, developers and testers complement each other. This requires a sensitive approach on the part of the tester: errors should always be reported factually, never personally.

When to Test?

Here is the rule: the sooner the better.

A defect can be found in any phase of the software development life cycle. It is therefore better to start testing at an early stage in order to minimize defects in the later stages. The software tester should already be involved in requirements engineering in order to determine the acceptance criteria that will have to be checked later. At this stage, errors (such as logical ones) should already be found in reviews and fixed. The creation of test cases should start along with development.

The tester should be involved in all phases of the project.

Which Test Methods?

There are many different types of tests. Which ones are used, how, and when can vary from project to project. The following are the most commonly performed:

- Component Test: A test at the code level, where the function of each component is tested. These tests are highly recommended because errors can be fixed at an early stage.

- Integration Test: All subsystems of the system are tested for compatibility.

- Acceptance Test: The functions of the system are checked against the acceptance criteria.
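To make the component level concrete, here is a minimal sketch of what such a test could look like with Python's built-in `unittest` module. The function `apply_discount` and its rules are purely hypothetical and stand in for any small unit a developer might write; they are not taken from a real project.

```python
import unittest


def apply_discount(price: float, percent: float) -> float:
    """Hypothetical component under test: reduce a price by a percentage."""
    if not 0 <= percent <= 100:
        raise ValueError("percent must be between 0 and 100")
    return round(price * (1 - percent / 100), 2)


class ApplyDiscountTest(unittest.TestCase):
    """Component tests: each method checks one behavior of the unit."""

    def test_regular_discount(self):
        self.assertEqual(apply_discount(200.0, 25), 150.0)

    def test_zero_discount_keeps_price(self):
        self.assertEqual(apply_discount(80.0, 0), 80.0)

    def test_invalid_percent_is_rejected(self):
        with self.assertRaises(ValueError):
            apply_discount(80.0, 120)
```

Saved to a file, these tests can be run with `python -m unittest <file>`. Because they live next to the code and run in seconds, a failure points directly at the unit that needs fixing.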

Agile software development supports testing as early as possible. In the waterfall model, for example, testing is performed at the very end, because only then is the software available. In agile development, testing can be integrated into every sprint, because each sprint finishes new components.

Integration of testing in a sprint

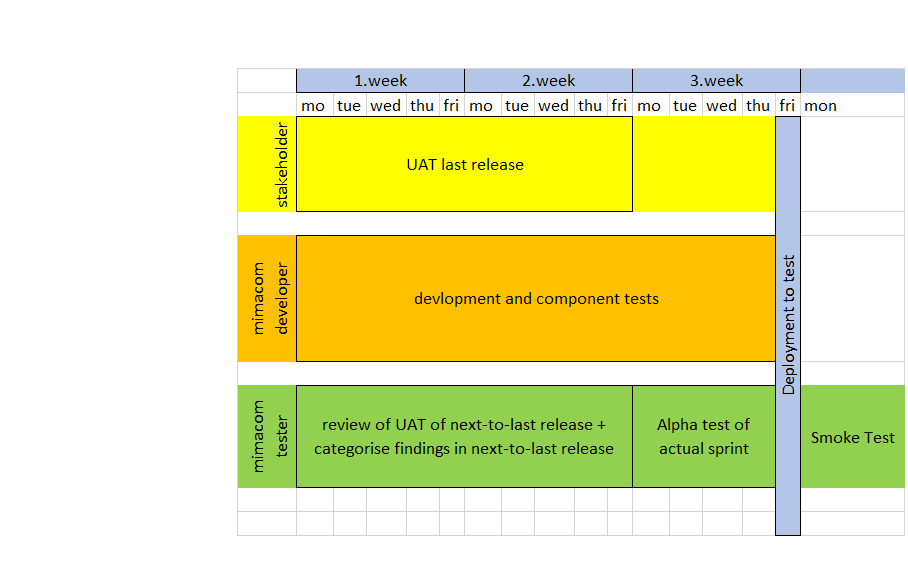

We have integrated the test process into a 3-week sprint like this:

Glossary:

- UAT (User Acceptance Test): The functions of the system are checked by the user against the acceptance criteria in a user test environment.

- Alpha Test: The functions of the system are checked by a tester against the acceptance criteria in a developer test environment.

- Smoke Test: The main functions of the system are checked to confirm that they work.

The test cycle is as follows:

- The mimacom developer creates component tests for the new components in the current sprint.

- The new components are additionally verified by mutual testing, with developers checking each other's work.

- At the end of the sprint, the mimacom tester checks the components of the current sprint in the development environment (Alpha Test). This ensures that functional results are delivered to the stakeholder.

- At the end of the sprint, the new release is provided.

- The mimacom tester tests the release in a smoke test.

- The stakeholder executes a user acceptance test (UAT) of the release in the next sprint.

- The mimacom tester reviews the results of the stakeholder's UAT.

- The mimacom tester categorizes the findings and, if necessary, adds them to the backlog.

How can we further improve the test process?

To prevent testing from looping, with test, bugfix and retest repeated endlessly, it makes sense to define test exit criteria. We should, however, give up the idea that software can be tested until it is completely error-free, so the absence of all errors cannot be a serious exit criterion. Fulfillment of the acceptance criteria is the obvious end point for testing, but this must be agreed with the stakeholder.
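One way to keep such an agreement unambiguous is to write the exit criteria down as an explicit check. The sketch below is only an illustration: the `AcceptanceResult` type, the `exit_criteria_met` function and the notion of an "open blocker" count are assumptions made for this example, and the real criteria would have to come from the stakeholder.

```python
from dataclasses import dataclass


@dataclass
class AcceptanceResult:
    """Hypothetical record of one acceptance criterion and its test outcome."""
    criterion: str
    passed: bool


def exit_criteria_met(results: list[AcceptanceResult], open_blockers: int) -> bool:
    """Testing may stop when every acceptance criterion passed and no
    blocking defect is still open. Minor open findings do not prevent exit."""
    return bool(results) and all(r.passed for r in results) and open_blockers == 0


results = [
    AcceptanceResult("User can log in", True),
    AcceptanceResult("Report exports as PDF", True),
]
print(exit_criteria_met(results, open_blockers=0))  # True
print(exit_criteria_met(results, open_blockers=2))  # False
```

Note that the check does not ask for zero defects overall. It only asks whether the agreed criteria are fulfilled, which is what breaks the test-bugfix-retest loop.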

Then we should consider which selection of test cases forms the regression tests. Regression tests make sure that a change hasn't broken any existing functionality.
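There are many ways to make this selection. As one possible sketch, assuming each test case is annotated with the components it touches, the regression tests for a change could be picked by component. The function `select_regression_tests` and the test and component names below are hypothetical.

```python
def select_regression_tests(test_cases: dict[str, set[str]], changed: set[str]) -> list[str]:
    """Pick every test case that touches at least one changed component."""
    return sorted(name for name, components in test_cases.items() if components & changed)


# Hypothetical mapping from test case to the components it exercises.
test_cases = {
    "test_login": {"auth"},
    "test_checkout": {"cart", "payment"},
    "test_profile_page": {"auth", "profile"},
}

print(select_regression_tests(test_cases, {"auth"}))
# ['test_login', 'test_profile_page']
```

A selection like this keeps the regression run short, at the price of maintaining the mapping. A fixed core set of tests that always runs is a simpler alternative.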

With further support, the little sister will continue to grow up and we'll see how lucky we are to have her.